6

Arithmetic

This chapter discusses arithmetic computation in assembly language. By the end of this chapter, you should be able to translate arithmetic expressions and assignment statements from high-level languages like Pascal and C/C++ into x86-64 assembly language.

6.1 x86-64 Integer Arithmetic Instructions

Before you learn how to encode arithmetic expressions in assembly language, it helps to cover the remaining arithmetic instructions in the x86-64 instruction set. Previous chapters have covered most of the arithmetic and logical instructions, so this section covers the few remaining instructions you’ll need.

6.1.1 Sign- and Zero-Extension Instructions

Several arithmetic operations require sign- or zero-extended values before the operation. So let’s first consider the sign- and zero-extension instructions. The x86-64 provides several instructions to sign- or zero-extend a smaller number to a larger number. Table 6-1 lists instructions that will sign-extend the AL, AX, EAX, and RAX registers.

Table 6-1: Instructions for Extending AL, AX, EAX, and RAX

| Instruction | Explanation |
| --- | --- |
| cbw | Converts the byte in AL to a word in AX via sign extension |
| cwd | Converts the word in AX to a double word in DX:AX via sign extension |
| cdq | Converts the double word in EAX to a quad word in EDX:EAX via sign extension |
| cqo | Converts the quad word in RAX to an octa word in RDX:RAX via sign extension |
| cwde | Converts the word in AX to a double word in EAX via sign extension |
| cdqe | Converts the double word in EAX to a quad word in RAX via sign extension |

Note that the cwd (convert word to double word) instruction does not sign-extend the word in AX to a double word in EAX. Instead, it stores the HO word of the sign extension into the DX register (the notation DX:AX indicates that you have a double-word value, with DX containing the upper 16 bits and AX containing the lower 16 bits of the value). If you want the sign extension of AX to go into EAX, you should use the cwde (convert word to double word, extended) instruction. In a similar fashion, the cdq instruction sign-extends EAX into EDX:EAX. Use the cdqe instruction if you want to sign-extend EAX into RAX.

For general sign-extension operations, the x86-64 provides an extension of the mov instruction, movsx (move with sign extension), that copies data and sign-extends the data while copying it. The movsx instruction’s syntax is similar to that of mov:

movsxd dest, source ; If dest is 64 bits and source is 32 bits

movsx dest, source ; For all other operand combinations

The big difference in syntax between these instructions and the mov instruction is that the destination operand must usually be larger than the source operand.1 For example, if the source operand is a byte, then the destination operand must be a word, dword, or qword. The destination operand must also be a register; the source operand, however, can be a memory location.2 The movsx instruction does not allow constant operands.

For whatever reason, MASM requires a different instruction mnemonic (instruction name) when sign-extending a 32-bit operand into a 64-bit register (movsxd rather than movsx).

To zero-extend a value, you can use the movzx instruction. It does not have the restrictions of movsx; as long as the destination operand is larger than the source operand, the instruction works fine. It allows 8 to 16, 32, or 64 bits, and 16 to 32 or 64 bits. There is no 32- to 64-bit version (it turns out this is unnecessary).

The x86-64 CPUs, for historical reasons, will always zero-extend a register from 32 bits to 64 bits when performing 32-bit operations. Therefore, to zero-extend a 32-bit register into a 64-bit register, you need only move the (32-bit) register into itself; for example:

mov eax, eax ; Zero-extends EAX into RAX

Zero-extending certain 8-bit registers (AL, BL, CL, and DL) into their corresponding 16-bit registers is easily accomplished without using movzx by loading the complementary HO register (AH, BH, CH, or DH) with 0. To zero-extend AX into DX:AX or EAX into EDX:EAX, all you need to do is load DX or EDX with 0.3

Because of instruction-encoding limitations, the x86-64 does not allow you to zero- or sign-extend the AH, BH, CH, or DH registers into any of the 64-bit registers.
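If you think in C, the following sketch may help pin down what sign and zero extension compute. It is only a model of the results, not of the instructions themselves, and the function names are hypothetical. It uses plain arithmetic rather than casts to narrower signed types so that the behavior is fully defined by the C standard.

```c
#include <stdint.h>

/* Model of movsx reg32, reg8: replicate bit 7 into bits 8..31. */
int32_t sign_extend_8_to_32(uint8_t bits)
{
    /* Flipping the sign bit and subtracting 128 maps 0..255 to -128..127
       with the same low 8 bits as the input. */
    return (int32_t)(bits ^ 0x80) - 0x80;
}

/* Model of movzx reg32, reg8: the HO bits are simply 0. */
uint32_t zero_extend_8_to_32(uint8_t bits)
{
    return (uint32_t)bits;
}

/* Model of cdqe (or movsxd reg64, reg32): replicate bit 31 into bits 32..63. */
int64_t sign_extend_32_to_64(uint32_t bits)
{
    return (int64_t)(bits ^ 0x80000000u) - (int64_t)0x80000000;
}

/* Model of mov eax, eax: a 32-bit write clears the HO 32 bits of the register. */
uint64_t zero_extend_32_to_64(uint32_t bits)
{
    return (uint64_t)bits;
}
```

For example, the byte 0FFh becomes –1 under sign extension but 255 under zero extension, which is why you must know whether a value is signed before you widen it.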

6.1.2 The mul and imul Instructions

You’ve already seen a subset of the imul instructions available in the x86-64 instruction set (see “The imul Instruction” in Chapter 4). This section presents the extended-precision version of imul along with the unsigned mul instruction.

The multiplication instructions provide you with another taste of irregularity in the x86-64’s instruction set. Instructions like add, sub, and many others in the x86-64 instruction set support two operands, just like the mov instruction. Unfortunately, there weren’t enough bits in the original 8086 opcode byte to support all instructions, so the x86-64 treats the mul (unsigned multiply) and imul (signed integer multiply) instructions as single-operand instructions, just like the inc, dec, and neg instructions. Of course, multiplication is a two-operand function. To work around this fact, the x86-64 always assumes the accumulator (AL, AX, EAX, or RAX) is the destination operand.

Another problem with the mul and imul instructions is that you cannot use them to multiply the accumulator by a constant. Intel quickly discovered the need to support multiplication by a constant and added the more general versions of the imul instruction to overcome this problem. Nevertheless, you must be aware that the basic mul and imul instructions do not support the full range of operands as the imul appearing in Chapter 4 does.

The multiply instruction has two forms: unsigned multiplication (mul) and signed multiplication (imul). Unlike addition and subtraction, you need separate instructions for signed and unsigned operations.

The single-operand multiply instructions take the following forms:

Unsigned multiplication:

mul reg8 ; Returns AX

mul reg16 ; Returns DX:AX

mul reg32 ; Returns EDX:EAX

mul reg64 ; Returns RDX:RAX

mul mem8 ; Returns AX

mul mem16 ; Returns DX:AX

mul mem32 ; Returns EDX:EAX

mul mem64 ; Returns RDX:RAX

Signed (integer) multiplication:

imul reg8 ; Returns AX

imul reg16 ; Returns DX:AX

imul reg32 ; Returns EDX:EAX

imul reg64 ; Returns RDX:RAX

imul mem8 ; Returns AX

imul mem16 ; Returns DX:AX

imul mem32 ; Returns EDX:EAX

imul mem64 ; Returns RDX:RAX

The result of multiplying two n-bit values may require as many as 2 × n bits. Therefore, if the operand is an 8-bit quantity, the result could require 16 bits. Likewise, a 16-bit operand produces a 32-bit result, a 32-bit operand produces 64 bits, and a 64-bit operand requires as many as 128 bits to hold the result. Table 6-2 lists the various computations.

Table 6-2: mul and imul Operations

| Instruction | Computes |
| --- | --- |
| mul operand8 | AX = AL × operand8 (unsigned) |
| imul operand8 | AX = AL × operand8 (signed) |
| mul operand16 | DX:AX = AX × operand16 (unsigned) |
| imul operand16 | DX:AX = AX × operand16 (signed) |
| mul operand32 | EDX:EAX = EAX × operand32 (unsigned) |
| imul operand32 | EDX:EAX = EAX × operand32 (signed) |
| mul operand64 | RDX:RAX = RAX × operand64 (unsigned) |
| imul operand64 | RDX:RAX = RAX × operand64 (signed) |

If an 8×8-, 16×16-, 32×32-, or 64×64-bit product requires more than 8, 16, 32, or 64 bits (respectively), the mul and imul instructions set the carry and overflow flags; otherwise, they clear both flags. Both instructions leave the sign and zero flags undefined.
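The 32-bit row of Table 6-2 can be modeled in C with a 64-bit intermediate product. This is a sketch for illustration only; the struct and function names are made up for this example, and the single flag field stands in for the carry and overflow flags, which these instructions always set or clear together.

```c
#include <stdint.h>
#include <stdbool.h>

typedef struct {
    uint32_t lo;            /* What lands in EAX (LO 32 bits of the product) */
    uint32_t hi;            /* What lands in EDX (HO 32 bits of the product) */
    bool carry_overflow;    /* CF and OF (always equal after mul/imul)       */
} mul32_result;

/* Model of: mul operand32 (EDX:EAX = EAX * operand32, unsigned). */
mul32_result model_mul32(uint32_t eax, uint32_t operand)
{
    uint64_t product = (uint64_t)eax * operand;
    mul32_result r;
    r.lo = (uint32_t)product;
    r.hi = (uint32_t)(product >> 32);
    r.carry_overflow = (r.hi != 0);     /* Product needs more than 32 bits */
    return r;
}

/* Model of: imul operand32 (EDX:EAX = EAX * operand32, signed). */
mul32_result model_imul32(int32_t eax, int32_t operand)
{
    int64_t product = (int64_t)eax * operand;
    uint64_t bits = (uint64_t)product;  /* Two's complement bit pattern */
    mul32_result r;
    r.lo = (uint32_t)bits;
    r.hi = (uint32_t)(bits >> 32);
    /* Flags are set when the product does not fit in a signed 32-bit value. */
    r.carry_overflow = (product < INT32_MIN || product > INT32_MAX);
    return r;
}
```

Note that a small negative signed product such as –1 × 2 fills EDX with 1 bits without setting the flags, because EDX is just the sign extension of EAX in that case.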

You’ll use the single-operand mul and imul instructions quite a lot when you learn about extended-precision arithmetic in Chapter 8. Unless you’re doing multiprecision work, however, you’ll probably want to use the more generic multi-operand version of the imul instruction in place of the extended-precision mul or imul. However, the generic imul (see Chapter 4) is not a complete replacement for these two instructions; in addition to the number of operands, several differences exist. The following rules apply specifically to the generic (multi-operand) imul instruction:

- There isn’t an 8×8-bit multi-operand imul instruction available.
- The generic imul instruction does not produce a 2×n-bit result, but truncates the result to n bits. That is, a 16×16-bit multiplication produces a 16-bit result. Likewise, a 32×32-bit multiplication produces a 32-bit result. These instructions set the carry and overflow flags if the result does not fit into the destination register.
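To see what the truncation rule means in practice, here is a small C model of a 32-bit two-operand imul. It is a sketch under the assumption of two's complement wraparound (which is what the hardware does); the function name and the overflow out-parameter are invented for this illustration.

```c
#include <stdint.h>
#include <stdbool.h>

/* Model of: imul dest32, src32
   Returns the LO 32 bits of the product as a signed value and reports,
   through *overflow, whether the full product fit in 32 bits (CF/OF). */
int32_t model_imul32_truncating(int32_t dest, int32_t src, bool *overflow)
{
    int64_t full = (int64_t)dest * src;
    *overflow = (full < INT32_MIN || full > INT32_MAX);

    /* Keep only the LO 32 bits, then reinterpret them as signed without
       relying on implementation-defined narrowing conversions. */
    uint32_t low = (uint32_t)(uint64_t)full;
    return (int32_t)((int64_t)(low ^ 0x80000000u) - (int64_t)0x80000000);
}
```

Multiplying 100,000 by 100,000 this way yields 1,410,065,408 with the overflow indication set: the HO bits of the true product (10,000,000,000) are simply discarded.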

6.1.3 The div and idiv Instructions

The x86-64 divide instructions perform a 128/64-bit division, a 64/32-bit division, a 32/16-bit division, or a 16/8-bit division. These instructions take the following forms:

div reg8

div reg16

div reg32

div reg64

div mem8

div mem16

div mem32

div mem64

idiv reg8

idiv reg16

idiv reg32

idiv reg64

idiv mem8

idiv mem16

idiv mem32

idiv mem64

The div instruction is an unsigned division operation. If the operand is an 8-bit operand, div divides the AX register by the operand, leaving the quotient in AL and the remainder (modulo) in AH. If the operand is a 16-bit quantity, the div instruction divides the 32-bit quantity in DX:AX by the operand, leaving the quotient in AX and the remainder in DX. With 32-bit operands, div divides the 64-bit value in EDX:EAX by the operand, leaving the quotient in EAX and the remainder in EDX. Finally, with 64-bit operands, div divides the 128-bit value in RDX:RAX by the operand, leaving the quotient in RAX and the remainder in RDX.

There is no variant of the div or idiv instructions that allows you to divide a value by a constant. If you want to divide a value by a constant, you need to create a memory object (preferably in the .const section) that is initialized with the constant, and then use that memory value as the div/idiv operand. For example:

.const

ten dword 10

.

.

.

div ten ; Divides EDX:EAX by 10

The idiv instruction computes a signed quotient and remainder. The syntax for the idiv instruction is identical to div (except for the use of the idiv mnemonic), though creating signed operands for idiv may require a different sequence of instructions prior to executing idiv than for div.

You cannot, on the x86-64, simply divide one unsigned 8-bit value by another. If the denominator is an 8-bit value, the numerator must be a 16-bit value. If you need to divide one unsigned 8-bit value by another, you must zero-extend the numerator to 16 bits by loading the numerator into the AL register and then moving 0 into the AH register. Failing to zero-extend AL before executing div may cause the x86-64 to produce incorrect results! When you need to divide two 16-bit unsigned values, you must zero-extend the AX register (which contains the numerator) into the DX register. To do this, just load 0 into the DX register. If you need to divide one 32-bit value by another, you must zero-extend the EAX register into EDX (by loading a 0 into EDX) before the division. Finally, to divide one 64-bit number by another, you must zero-extend RAX into RDX (for example, using an xor rdx, rdx instruction) prior to the division.

When dealing with signed integer values, you will need to sign-extend AL into AX, AX into DX, EAX into EDX, or RAX into RDX before executing idiv. To do so, use the cbw, cwd, cdq, or cqo instructions.4 Failure to do so may produce incorrect results.

The x86-64’s divide instructions have one other issue: they can raise a fatal exception. First, of course, you can attempt to divide a value by 0. Another problem is that the quotient may be too large to fit into the RAX, EAX, AX, or AL register. For example, the 16/8-bit division 8000h/2 produces the quotient 4000h with a remainder of 0. 4000h will not fit into 8 bits. If this happens, or you attempt to divide by 0, the x86-64 will generate a division exception or integer overflow exception. This usually means your program will crash. If this happens to you, chances are you didn’t sign- or zero-extend your numerator before executing the division operation. Because this error may cause your program to crash, you should be very careful about the values you select when using division.

The x86-64 leaves the carry, overflow, sign, and zero flags undefined after a division operation. Therefore, you cannot test for problems after a division operation by checking the flag bits.
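Because the flags tell you nothing after a division, any checking has to happen before the instruction executes. The following C sketch models two of the cases discussed in this section and returns false wherever the CPU would raise an exception. The function names and the Boolean return convention are assumptions made for this example.

```c
#include <stdint.h>
#include <stdbool.h>

/* Model of: div operand8 (AX / operand8 -> quotient in AL, remainder in AH).
   Returns false where the real instruction would fault. */
bool model_div8(uint16_t ax, uint8_t operand, uint8_t *al, uint8_t *ah)
{
    if (operand == 0)
        return false;                   /* Division by 0 */
    uint16_t quotient = (uint16_t)(ax / operand);
    if (quotient > 0xFF)
        return false;                   /* Quotient will not fit in AL */
    *al = (uint8_t)quotient;
    *ah = (uint8_t)(ax % operand);
    return true;
}

/* Model of: cdq followed by idiv operand32 (signed 32-bit by 32-bit).
   After a correct sign extension, only two inputs can still fault. */
bool model_idiv32(int32_t eax, int32_t operand,
                  int32_t *quotient, int32_t *remainder)
{
    if (operand == 0)
        return false;                   /* Division by 0 */
    if (eax == INT32_MIN && operand == -1)
        return false;                   /* +2,147,483,648 will not fit in EAX */
    *quotient = eax / operand;          /* Truncates toward 0, as idiv does */
    *remainder = eax % operand;         /* Takes the sign of the dividend   */
    return true;
}
```

The first function rejects the 8000h/2 example from the text, while 0107h/2 succeeds with a quotient of 83h and a remainder of 1.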

6.1.4 The cmp Instruction, Revisited

As noted in “The cmp Instruction and Corresponding Conditional Jumps” in Chapter 2, the cmp instruction updates the x86-64’s flags according to the result of the subtraction operation (leftOperand - rightOperand). The x86-64 sets the flags in an appropriate fashion so that we can read this instruction as “compare leftOperand to rightOperand.” You can test the result of the comparison by using the conditional set instructions to check the appropriate flags in the FLAGS register (see “The setcc Instructions” later in this chapter) or the conditional jump instructions (Chapter 2 or Chapter 7).

Probably the first place to start when exploring the cmp instruction is to look at exactly how it affects the flags. Consider the following cmp instruction:

cmp ax, bx

This instruction performs the computation AX – BX and sets the flags depending on the result of the computation. The flags are set as follows (also see Table 6-3):

ZF

- The zero flag is set if and only if AX = BX. This is the only time AX – BX produces a 0 result. Hence, you can use the zero flag to test for equality or inequality.

SF

- The sign flag is set to 1 if the result is negative. At first glance, you might think that this flag would be set if AX is less than BX, but this isn’t always the case. If AX = 7FFFh and BX = –1 (0FFFFh), then subtracting BX from AX produces 8000h, which is negative (and so the sign flag will be set) even though AX is greater than BX. So, for signed comparisons anyway, the sign flag doesn’t contain the proper status. For unsigned operands, consider AX = 0FFFFh and BX = 1. Here, AX is greater than BX, but their difference is 0FFFEh, which is still negative. As it turns out, the sign flag and the overflow flag, taken together, can be used for comparing two signed values.

OF

- The overflow flag is set after a cmp operation if the difference of AX and BX produced a signed overflow or underflow. As mentioned previously, the sign and overflow flags are both used when performing signed comparisons.

CF

- The carry flag is set after a cmp operation if subtracting BX from AX requires a borrow. This occurs only when AX is less than BX, where AX and BX are both unsigned values.

Table 6-3: Condition Code Settings After cmp

| Unsigned operands | Signed operands |
| --- | --- |
| ZF: Equality/inequality | ZF: Equality/inequality |
| CF: Left < Right (CF = 1); Left ≥ Right (CF = 0) | CF: No meaning |
| SF: No meaning | SF: See discussion in this section |
| OF: No meaning | OF: See discussion in this section |

Given that the cmp instruction sets the flags in this fashion, you can test the comparison of the two operands with the following flags:

cmp Left, Right

For signed comparisons, the SF (sign) and OF (overflow) flags, taken together, have the following meanings:

- If [(SF = 0) and (OF = 1)] or [(SF = 1) and (OF = 0)], then Left < Right for a signed comparison.
- If [(SF = 0) and (OF = 0)] or [(SF = 1) and (OF = 1)], then Left ≥ Right for a signed comparison.

Note that (SF xor OF) is 1 if the left operand is less than the right operand. Conversely, (SF xor OF) is 0 if the left operand is greater than or equal to the right operand.

To understand why these flags are set in this manner, consider the examples in Table 6-4.

Table 6-4: Sign and Overflow Flag Settings After Subtraction

| Left | Minus | Right | SF | OF |
| --- | --- | --- | --- | --- |
| 0FFFFh (–1) | – | 0FFFEh (–2) | 0 | 0 |
| 8000h (–32,768) | – | 0001h | 0 | 1 |
| 0FFFEh (–2) | – | 0FFFFh (–1) | 1 | 0 |
| 7FFFh (32,767) | – | 0FFFFh (–1) | 1 | 1 |

Remember, the cmp operation is really a subtraction; therefore, the first example in Table 6-4 computes (–1) – (–2), which is (+1). The result is positive and an overflow did not occur, so both SF and OF are 0. Because (SF xor OF) is 0, Left is greater than or equal to Right.

In the second example, the cmp instruction computes (–32,768) – (+1), which is (–32,769). Because a 16-bit signed integer cannot represent this value, the value wraps around to 7FFFh (+32,767) and sets the overflow flag. The result is positive (at least as a 16-bit value), so the CPU clears the sign flag. (SF xor OF) is 1 here, so Left is less than Right.

In the third example, cmp computes (–2) – (–1), which produces (–1). No overflow occurred, so the OF is 0, and the result is negative, so the SF is 1. Because (SF xor OF) is 1, Left is less than Right.

In the fourth (and final) example, cmp computes (+32,767) – (–1). This produces (+32,768), setting the overflow flag. Furthermore, the value wraps around to 8000h (–32,768), so the sign flag is set as well. Because (SF xor OF) is 0, Left is greater than or equal to Right.
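If you would like to check the four cases in Table 6-4 for yourself, the following C sketch models how a 16-bit cmp derives the flags. The struct and function names are hypothetical, and the overflow test uses a standard bit trick: a subtraction overflows exactly when the operands have different signs and the result’s sign differs from the left operand’s sign.

```c
#include <stdint.h>
#include <stdbool.h>

typedef struct {
    bool zf;    /* Zero flag     */
    bool sf;    /* Sign flag     */
    bool of;    /* Overflow flag */
    bool cf;    /* Carry flag    */
} cmp_flags;

/* Model of: cmp left16, right16 (computes left - right, keeps only the flags). */
cmp_flags model_cmp16(uint16_t left, uint16_t right)
{
    uint16_t diff = (uint16_t)(left - right);   /* Wraps modulo 65,536 */
    cmp_flags f;
    f.zf = (diff == 0);
    f.sf = (diff & 0x8000u) != 0;               /* HO bit of the result */
    f.cf = (left < right);                      /* Unsigned borrow      */
    f.of = (((left ^ right) & (left ^ diff)) & 0x8000u) != 0;
    return f;
}

/* Signed "Left < Right" is (SF xor OF). */
bool signed_less(cmp_flags f)
{
    return f.sf != f.of;
}

/* Unsigned "Left < Right" is simply CF. */
bool unsigned_less(cmp_flags f)
{
    return f.cf;
}
```

Passing 7FFFh and 0FFFFh, for instance, produces SF = 1 and OF = 1, so signed_less reports false, while unsigned_less reports true because 7FFFh is below 0FFFFh when both are treated as unsigned.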

6.1.5 The setcc Instructions

The setcc (set on condition) instructions set a single-byte operand (register or memory) to 0 or 1 depending on the values in the FLAGS register. The general formats for the setcc instructions are as follows:

setcc reg8

setcc mem8

Here, setcc represents one of the mnemonics appearing in Tables 6-5, 6-6, and 6-7. These instructions store a 0 in the corresponding operand if the condition is false, and they store a 1 in the 8-bit operand if the condition is true.

Table 6-5: setcc Instructions That Test Flags

| Instruction | Description | Condition | Comments |
| --- | --- | --- | --- |
| setc | Set if carry | Carry = 1 | Same as setb, setnae |
| setnc | Set if no carry | Carry = 0 | Same as setnb, setae |
| setz | Set if zero | Zero = 1 | Same as sete |
| setnz | Set if not zero | Zero = 0 | Same as setne |
| sets | Set if sign | Sign = 1 | |
| setns | Set if no sign | Sign = 0 | |
| seto | Set if overflow | Overflow = 1 | |
| setno | Set if no overflow | Overflow = 0 | |
| setp | Set if parity | Parity = 1 | Same as setpe |
| setpe | Set if parity even | Parity = 1 | Same as setp |
| setnp | Set if no parity | Parity = 0 | Same as setpo |
| setpo | Set if parity odd | Parity = 0 | Same as setnp |

The setcc instructions in Table 6-5 simply test the flags without any other meaning attached to the operation. You could, for example, use setc to check the carry flag after a shift, rotate, bit test, or arithmetic operation.

The setp/setpe and setnp/setpo instructions check the parity flag. These instructions appear here for completeness, but this book will not spend much time discussing the parity flag; in modern code, it’s typically used only to check for an FPU not-a-number (NaN) condition.

The cmp instruction works synergistically with the setcc instructions. Immediately after a cmp operation, the processor flags provide information concerning the relative values of those operands. They allow you to see if one operand is less than, equal to, or greater than the other.

Two additional groups of setcc instructions are useful after a cmp operation. The first group deals with the result of an unsigned comparison (Table 6-6); the second group deals with the result of a signed comparison (Table 6-7).

Table 6-6: setcc Instructions for Unsigned Comparisons

| Instruction | Description | Condition | Comments |
| --- | --- | --- | --- |
| seta | Set if above (>) | Carry = 0, Zero = 0 | Same as setnbe |
| setnbe | Set if not below or equal (not ≤) | Carry = 0, Zero = 0 | Same as seta |
| setae | Set if above or equal (≥) | Carry = 0 | Same as setnc, setnb |
| setnb | Set if not below (not <) | Carry = 0 | Same as setnc, setae |
| setb | Set if below (<) | Carry = 1 | Same as setc, setnae |
| setnae | Set if not above or equal (not ≥) | Carry = 1 | Same as setc, setb |
| setbe | Set if below or equal (≤) | Carry = 1 or Zero = 1 | Same as setna |
| setna | Set if not above (not >) | Carry = 1 or Zero = 1 | Same as setbe |
| sete | Set if equal (==) | Zero = 1 | Same as setz |
| setne | Set if not equal (≠) | Zero = 0 | Same as setnz |

Table 6-7: setcc Instructions for Signed Comparisons

| Instruction | Description | Condition | Comments |
| --- | --- | --- | --- |
| setg | Set if greater (>) | Sign == Overflow and Zero == 0 | Same as setnle |
| setnle | Set if not less than or equal (not ≤) | Sign == Overflow and Zero == 0 | Same as setg |
| setge | Set if greater than or equal (≥) | Sign == Overflow | Same as setnl |
| setnl | Set if not less than (not <) | Sign == Overflow | Same as setge |
| setl | Set if less than (<) | Sign ≠ Overflow | Same as setnge |
| setnge | Set if not greater or equal (not ≥) | Sign ≠ Overflow | Same as setl |
| setle | Set if less than or equal (≤) | Sign ≠ Overflow or Zero == 1 | Same as setng |
| setng | Set if not greater than (not >) | Sign ≠ Overflow or Zero == 1 | Same as setle |
| sete | Set if equal (==) | Zero == 1 | Same as setz |
| setne | Set if not equal (≠) | Zero == 0 | Same as setnz |
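Choosing between the unsigned mnemonics in Table 6-6 and the signed mnemonics in Table 6-7 matters because the same bit patterns can compare differently. The C sketch below illustrates this with hypothetical model functions for setb and setl; both take raw 32-bit patterns, and the helper as_signed reinterprets a pattern as a two's complement value.

```c
#include <stdint.h>

/* Reinterpret a 32-bit pattern as a signed two's complement value. */
static int32_t as_signed(uint32_t bits)
{
    return (int32_t)((int64_t)(bits ^ 0x80000000u) - (int64_t)0x80000000);
}

/* Model of: cmp left, right / setb result  (unsigned "below") */
uint8_t model_setb(uint32_t left, uint32_t right)
{
    return (uint8_t)(left < right);
}

/* Model of: cmp left, right / setl result  (signed "less than") */
uint8_t model_setl(uint32_t left, uint32_t right)
{
    return (uint8_t)(as_signed(left) < as_signed(right));
}
```

With left = 0FFFFFFFFh and right = 1, the setb model produces 0 (4,294,967,295 is not below 1), whereas the setl model produces 1 (–1 is less than 1).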

The setcc instructions are particularly valuable because they can convert the result of a comparison to a Boolean value (false/true or 0/1). This is especially important when translating statements from a high-level language like Swift or C/C++ into assembly language. The following example shows how to use these instructions in this manner:

; bool = a <= b:

mov eax, a

cmp eax, b

setle bool ; bool is a byte variable

Because the setcc instructions always produce 0 or 1, you can use the results with the and and or instructions to compute complex Boolean values:

; bool = ((a <= b) && (d == e)):

mov eax, a

cmp eax, b

setle bl

mov eax, d

cmp eax, e

sete bh

and bh, bl

mov bool, bh

6.1.6 The test Instruction

The x86-64 test instruction is to the and instruction what the cmp instruction is to sub. That is, the test instruction computes the logical AND of its two operands and sets the condition code flags based on the result; it does not, however, store the result of the logical AND back into the destination operand. The syntax for the test instruction is similar to and:

test operand1, operand2

The test instruction sets the zero flag if the result of the logical AND operation is 0. It sets the sign flag if the HO bit of the result contains a 1. The test instruction always clears the carry and overflow flags.

The primary use of the test instruction is to check whether an individual bit contains a 0 or a 1. Consider the instruction test al, 1. This instruction logically ANDs AL with the value 1; if bit 0 of AL contains 0, the result will be 0 (setting the zero flag) because all the other bits in the constant 1 are 0. Conversely, if bit 0 of AL contains 1, then the result is not 0, so test clears the zero flag. Therefore, you can test the zero flag after this test instruction to see if bit 0 contains a 0 or a 1 (for example, using setz or setnz instructions, or the jz/jnz instructions).

The test instruction can also check whether all the bits in a specified set of bits contain 0. The instruction test al, 0fh sets the zero flag if and only if the LO 4 bits of AL all contain 0.

One important use of the test instruction is to check whether a register contains 0. The instruction test reg, reg, where both operands are the same register, will logically AND that register with itself. If the register contains 0, the result is 0 and the CPU will set the zero flag. However, if the register contains a nonzero value, logically ANDing that value with itself produces that same nonzero value, so the CPU clears the zero flag. Therefore, you can check the zero flag immediately after the execution of this instruction (for example, using the setz or setnz instructions or the jz and jnz instructions) to see if the register contains 0. Here are some examples:

test eax, eax

setz bl ; BL is set to 1 if EAX contains 0

.

.

.

test bl, bl

jz bxIs0

; Do something if BL != 0

bxIs0:

One major failing of the test instruction is that immediate (constant) operands can be no larger than 32 bits (as is the case with most instructions), which makes it difficult to use this instruction to test for set bits beyond bit position 31. For testing individual bits, you can use the bt (bit test) instruction (see “Instructions That Manipulate Bits” in Chapter 12). Otherwise, you’ll have to move the 64-bit constant into a register (the mov instruction does support 64-bit immediate operands) and then test your target register against the 64-bit constant value in the newly loaded register.
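As a summary of this section, the following C sketch models what a 64-bit register-to-register test computes. The names are invented for this example; the carry and overflow flags are omitted because test always clears them.

```c
#include <stdint.h>
#include <stdbool.h>

typedef struct {
    bool zf;    /* Set when (operand1 AND operand2) is 0         */
    bool sf;    /* Set when bit 63 of (operand1 AND operand2) is 1 */
} test_flags;

/* Model of: test operand1, operand2 (64-bit operands).
   The AND result is used only to derive the flags and is then discarded. */
test_flags model_test64(uint64_t operand1, uint64_t operand2)
{
    uint64_t result = operand1 & operand2;
    test_flags f;
    f.zf = (result == 0);
    f.sf = (result >> 63) != 0;
    return f;
}
```

Calling model_test64(value, 1) mirrors test al, 1 (is bit 0 clear?), model_test64(value, value) mirrors test reg, reg (is the register 0?), and a mask such as 1 shifted left by 40 corresponds to the case in which the assembly code must first load the constant into a register.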

6.2 Arithmetic Expressions

Probably the biggest shock to beginners facing assembly language for the first time is the lack of familiar arithmetic expressions. Arithmetic expressions, in most high-level languages, look similar to their algebraic equivalents. For example:

x = y * z;

In assembly language, you’ll need several statements to accomplish this same task:

mov eax, y

imul eax, z

mov x, eax

Obviously, the HLL version is much easier to type, read, and understand. Although a lot of typing is involved, converting an arithmetic expression into assembly language isn’t difficult at all. By attacking the problem in steps, the same way you would solve the problem by hand, you can easily break any arithmetic expression into an equivalent sequence of assembly language statements.

6.2.1 Simple Assignments

The easiest expressions to convert to assembly language are simple assignments. Simple assignments copy a single value into a variable and take one of two forms:

variable = constant

or

var1 = var2

Converting the first form to assembly language is simple: just use this assembly language statement:

mov variable, constant

This mov instruction copies the constant into the variable.

The second assignment is slightly more complicated because the x86-64 doesn’t provide a memory-to-memory mov instruction. Therefore, to copy one memory variable into another, you must move the data through a register. By convention (and for slight efficiency reasons), most programmers tend to favor AL, AX, EAX, or RAX for this purpose. For example:

var1 = var2;

becomes

mov eax, var2

mov var1, eax

assuming that var1 and var2 are 32-bit variables. Use AL if they are 8-bit variables, use AX if they are 16-bit variables, or use RAX if they are 64-bit variables.

Of course, if you’re already using AL, AX, EAX, or RAX for something else, one of the other registers will suffice. Regardless, you will generally use a register to transfer one memory location to another.

6.2.2 Simple Expressions

The next level of complexity is a simple expression. A simple expression takes the form

var1 = term1 op term2;

where var1 is a variable, term1 and term2 are variables or constants, and op is an arithmetic operator (addition, subtraction, multiplication, and so on). Most expressions take this form. It should come as no surprise, then, that the x86-64 architecture was optimized for just this type of expression.

A typical conversion for this type of expression takes the form

mov eax, term1

op eax, term2

mov var1, eax

where op is the mnemonic that corresponds to the specified operation (for example, + is add, – is sub, and so forth).

Note that the simple expression var1 = const1 op const2; is easily handled with a compile-time expression and a single mov instruction. For example, to compute var1 = 5 + 3;, use the single instruction mov var1, 5 + 3.

You need to be aware of a few inconsistencies. When dealing with the (i)mul and (i)div instructions on the x86-64, you must use the AL, AX, EAX, and RAX registers and the AH, DX, EDX, and RDX registers. You cannot use arbitrary registers as you can with other operations. Also, don’t forget the sign-extension instructions if you’re performing a division operation to divide one 16-, 32-, or 64-bit number by another. Finally, don’t forget that some instructions may cause overflow. You may want to check for an overflow (or underflow) condition after an arithmetic operation.
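To make the overflow caveat concrete, here is a C sketch of x = y + z that also reports what the overflow flag (for signed operands) or the carry flag (for unsigned operands) would tell you after the add instruction. The function names and the out-parameters are assumptions made for this illustration.

```c
#include <stdint.h>
#include <stdbool.h>

/* Signed x = y + z. *overflow plays the role of OF after add. */
int32_t add_signed_checked(int32_t y, int32_t z, bool *overflow)
{
    int64_t wide = (int64_t)y + z;      /* Cannot overflow in 64 bits */
    *overflow = (wide < INT32_MIN || wide > INT32_MAX);

    /* Return the wrapped 32-bit result, as the CPU would store it. */
    uint32_t low = (uint32_t)(uint64_t)wide;
    return (int32_t)((int64_t)(low ^ 0x80000000u) - (int64_t)0x80000000);
}

/* Unsigned x = y + z. *carry plays the role of CF after add. */
uint32_t add_unsigned_checked(uint32_t y, uint32_t z, bool *carry)
{
    uint32_t sum = y + z;               /* Wraps modulo 2^32 */
    *carry = (sum < y);                 /* A wrapped sum is smaller than y */
    return sum;
}
```

In assembly language, the equivalent check is a conditional jump or setcc instruction on the appropriate flag immediately after the add, before any other instruction modifies the flags.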

Here are examples of common simple expressions:

; x = y + z:

mov eax, y

add eax, z

mov x, eax

; x = y - z:

mov eax, y

sub eax, z

mov x, eax

; x = y * z; (unsigned):

mov eax, y

mul z ; Don't forget this wipes out EDX

mov x, eax

; x = y * z; (signed):

mov eax, y

imul eax, z ; Does not affect EDX!

mov x, eax

; x = y div z; (unsigned div):

mov eax, y

xor edx, edx ; Zero-extend EAX into EDX

div z

mov x, eax

; x = y idiv z; (signed div):

mov eax, y

cdq ; Sign-extend EAX into EDX

idiv z

mov x, eax

; x = y % z; (unsigned remainder):

mov eax, y

xor edx, edx ; Zero-extend EAX into EDX

div z

mov x, edx ; Note that remainder is in EDX

; x = y % z; (signed remainder):

mov eax, y

cdq ; Sign-extend EAX into EDX

idiv z

mov x, edx ; Remainder is in EDX

Certain unary operations also qualify as simple expressions, producing additional inconsistencies to the general rule. A good example of a unary operation is negation. In a high-level language, negation takes one of two possible forms:

var = –var

or

var1 = –var2

Note that var = –constant is really a simple assignment, not a simple expression. You can specify a negative constant as an operand to the mov instruction:

mov var, -14

To handle var1 = –var1, use this single assembly language statement:

; var1 = -var1;

neg var1

If two different variables are involved, use the following:

; var1 = -var2;

mov eax, var2

neg eax

mov var1, eax

6.2.3 Complex Expressions

A complex expression is any arithmetic expression involving more than two terms and one operator. Such expressions are commonly found in programs written in a high-level language. Complex expressions may include parentheses to override operator precedence, function calls, array accesses, and so on. This section outlines the rules for converting such expressions.

A complex expression that is easy to convert to assembly language is one that involves three terms and two operators. For example:

w = w - y - z;

Clearly the straightforward assembly language conversion of this statement requires two sub instructions. However, even with an expression as simple as this, the conversion is not trivial. There are actually two ways to convert the preceding statement into assembly language:

mov eax, w

sub eax, y

sub eax, z

mov w, eax

and

mov eax, y

sub eax, z

sub w, eax

The second conversion, because it is shorter, looks better. However, it produces an incorrect result (assuming C-like semantics for the original statement). Associativity is the problem. The second sequence in the preceding example computes w = w – (y – z), which is not the same as w = (w – y) – z. How we place the parentheses around the subexpressions can affect the result. Note that if you are interested in a shorter form, you can use the following sequence:

mov eax, y

add eax, z

sub w, eax

This computes w = w – (y + z), equivalent to w = (w – y) – z.
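A quick numeric check makes the difference between the three groupings visible. The C functions below are only a sketch with hypothetical names; each mirrors one of the instruction sequences above, and the comments assume values small enough that no signed overflow occurs.

```c
#include <stdint.h>

/* Mirrors the first sequence: (w - y) - z, the left-associative reading. */
int32_t left_associative(int32_t w, int32_t y, int32_t z)
{
    return (w - y) - z;
}

/* Mirrors the second (incorrect) sequence: w - (y - z). */
int32_t wrong_grouping(int32_t w, int32_t y, int32_t z)
{
    return w - (y - z);
}

/* Mirrors the shorter, correct sequence: w - (y + z). */
int32_t short_form(int32_t w, int32_t y, int32_t z)
{
    return w - (y + z);
}
```

With w = 10, y = 3, and z = 2, the first and third functions both return 5, whereas the second returns 9.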

Precedence is another issue. Consider this expression:

x = w * y + z;

Once again, we can evaluate this expression in two ways:

x = (w * y) + z;

or

x = w * (y + z);

By now, you’re probably thinking that this explanation is crazy. Everyone knows the correct way to evaluate these expressions is by the former form. However, you’d be wrong. The APL programming language, for example, evaluates expressions solely from right to left and does not give one operator precedence over another. Which way is “correct” depends entirely on how you define precedence in your arithmetic system.

Consider this expression:

x op1 y op2 z

If op1 takes precedence over op2, then this evaluates to (x op1 y) op2 z. Otherwise, if op2 takes precedence over op1, this evaluates to x op1 (y op2 z). Depending on the operators and operands involved, these two computations could produce different results.

Most high-level languages use a fixed set of precedence rules to describe the order of evaluation in an expression involving two or more different operators. Such programming languages usually compute multiplication and division before addition and subtraction. Those that support exponentiation (for example, FORTRAN and BASIC) usually compute that before multiplication and division. These rules are intuitive because almost everyone learns them before high school.

When converting expressions into assembly language, you must be sure to compute the subexpression with the highest precedence first. The following example demonstrates this technique:

; w = x + y * z:

mov ebx, x

mov eax, y ; Must compute y * z first because "*"

imul eax, z ; has higher precedence than "+"

add eax, ebx

mov w, eax

If two operators appearing within an expression have the same precedence, you determine the order of evaluation by using associativity rules. Most operators are left-associative, meaning they evaluate from left to right. Addition, subtraction, multiplication, and division are all left-associative. A right-associative operator evaluates from right to left. The exponentiation operator in FORTRAN is a good example of a right-associative operator:

2**2**3

is equal to

2**(2**3)

not

(2**2)**3

The precedence and associativity rules determine the order of evaluation. Indirectly, these rules tell you where to place parentheses in an expression to determine the order of evaluation. Of course, you can always use parentheses to override the default precedence and associativity. However, the ultimate point is that your assembly code must complete certain operations before others to correctly compute the value of a given expression. The following examples demonstrate this principle:

; w = x - y - z:

mov eax, x ; All the same operator precedence,

sub eax, y ; so we need to evaluate from left

sub eax, z ; to right because they are left-

mov w, eax ; associative

; w = x + y * z:

mov eax, y ; Must compute y * z first because

imul eax, z ; multiplication has a higher

add eax, x ; precedence than addition

mov w, eax

; w = x / y - z:

mov eax, x ; Here we need to compute division

cdq ; first because it has the highest

idiv y ; precedence

sub eax, z

mov w, eax

; w = x * y * z:

mov eax, y ; Addition and multiplication are

imul eax, z ; commutative; therefore, the order

imul eax, x ; of evaluation does not matter

mov w, eax

The associativity rule has one exception: if an expression involves multiplication and division, it is generally better to perform the multiplication first. For example, given an expression of the form

w = x / y * z ; Note: This is (x * z) / y, not x / (y * z)

it is usually better to compute x * z and then divide the result by y rather than divide x by y and multiply the quotient by z.

This approach is better for two reasons. First, remember that the one-operand (extended) form of the imul instruction produces a 64-bit result in EDX:EAX (assuming 32-bit operands). By doing the multiplication first, you automatically sign-extend the product into the EDX register, so you do not have to sign-extend EAX prior to the division.

A second reason for doing the multiplication first is to increase the accuracy of the computation. Remember, (integer) division often produces an inexact result. For example, if you compute 5 / 2, you will get the value 2, not 2.5. Computing (5 / 2) × 3 produces 6. However, if you compute (5 × 3) / 2, you get the value 7, which is a little closer to the real quotient (7.5). Therefore, if you encounter an expression of the form

w = x / y * z;

you can usually convert it to the following assembly code:

mov eax, x

imul z ; Note the use of extended imul!

idiv y

mov w, eax

If the algorithm you’re encoding depends on the truncation effect of the division operation, you cannot use this trick to improve the algorithm. Moral of the story: always make sure you fully understand any expression you are converting to assembly language. If the semantics dictate that you must perform the division first, then do so.
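The accuracy argument for multiplying first can be illustrated with a short C sketch. The function names are hypothetical; the 64-bit intermediate product stands in for the EDX:EAX result of the extended imul, and the final narrowing cast assumes that the quotient fits in 32 bits (idiv would raise an exception otherwise).

```c
#include <stdint.h>

/* Divide first: the quotient is truncated before the multiplication. */
int32_t divide_first(int32_t x, int32_t y, int32_t z) {
    return (x / y) * z;
}

/* Multiply first, keeping a 64-bit intermediate product in the way the
   one-operand imul leaves its result in EDX:EAX. */
int32_t multiply_first(int32_t x, int32_t y, int32_t z) {
    int64_t product = (int64_t)x * z;
    return (int32_t)(product / y);
}
```

For x = 5, y = 2, and z = 3, divide_first returns 6 and multiply_first returns 7, matching the values given in the text.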

Consider the following statement:

w = x - y * x;

Because subtraction is not commutative, you cannot compute y * x and then subtract x from this result. Rather than use a straightforward multiplication-and-addition sequence, you’ll have to load x into a register, multiply y and x (leaving their product in a different register), and then subtract this product from x. For example:

mov ecx, x

mov eax, y

imul eax, x

sub ecx, eax

mov w, ecx

This trivial example demonstrates the need for temporary variables in an expression. The code uses the ECX register to temporarily hold a copy of x until it computes the product of y and x. As your expressions increase in complexity, the need for temporaries grows. Consider the following C statement:

w = (a + b) * (y + z);

Following the normal rules of algebraic evaluation, you compute the subexpressions inside the parentheses first (that is, the two subexpressions with the highest precedence) and set their values aside. When you’ve computed the values for both subexpressions, you can compute their product. One way to deal with a complex expression like this is to reduce it to a sequence of simple expressions whose results wind up in temporary variables. For example, you can convert the preceding single expression into the following sequence:

temp1 = a + b;

temp2 = y + z;

w = temp1 * temp2;

Because converting simple expressions to assembly language is quite easy, it’s now a snap to compute the former complex expression in assembly. The code is shown here:

mov eax, a

add eax, b

mov temp1, eax

mov eax, y

add eax, z

mov temp2, eax

mov eax, temp1

imul eax, temp2

mov w, eax

This code is grossly inefficient and requires that you declare a couple of temporary variables in your data segment. However, it is easy to optimize this code by keeping temporary variables, as much as possible, in x86-64 registers. By using x86-64 registers to hold the temporary results, this code becomes the following:

mov eax, a

add eax, b

mov ebx, y

add ebx, z

imul eax, ebx

mov w, eax

Here’s yet another example:

x = (y + z) * (a - b) / 10;

This can be converted to a set of four simple expressions:

temp1 = (y + z)

temp2 = (a - b)

temp1 = temp1 * temp2

x = temp1 / 10

You can convert these four simple expressions into the following assembly language statements:

.const

ten dword 10

.

.

.

mov eax, y ; Compute EAX = y + z

add eax, z

mov ebx, a ; Compute EBX = a - b

sub ebx, b

imul ebx ; This sign-extends EAX into EDX

idiv ten

mov x, eax

The most important thing to keep in mind is that you should keep temporary values in registers for efficiency. Use memory locations to hold temporaries only if you’ve run out of registers.

Ultimately, converting a complex expression to assembly language is very similar to solving the expression by hand, except instead of actually computing the result at each stage of the computation, you simply write the assembly code that computes the result.
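As a cross-check on the decomposition of x = (y + z) * (a - b) / 10 into simple expressions, the following C sketch performs the same steps one at a time. The function name and the register annotations in the comments are illustrative assumptions; the 64-bit product models the EDX:EAX pair, and the sketch assumes that the sums and the final quotient fit in 32 bits.

```c
#include <stdint.h>

int32_t eval_expr(int32_t y, int32_t z, int32_t a, int32_t b) {
    int32_t temp1 = y + z;                     /* EAX = y + z             */
    int32_t temp2 = a - b;                     /* EBX = a - b             */
    int64_t product = (int64_t)temp1 * temp2;  /* imul ebx -> EDX:EAX     */
    return (int32_t)(product / 10);            /* idiv ten -> EAX         */
}
```

Each local variable corresponds to one temporary, which is the same bookkeeping you do when assigning temporaries to registers by hand.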

6.2.4 Commutative Operators

If op represents an operator, that operator is commutative if the following relationship is always true:

(A op B) = (B op A)

As you saw in the previous section, commutative operators are nice because the order of their operands is immaterial, and this lets you rearrange a computation, often making it easier or more efficient. Often, rearranging a computation allows you to use fewer temporary variables. Whenever you encounter a commutative operator in an expression, you should always check whether you can use a better sequence to improve the size or speed of your code.

Tables 6-8 and 6-9, respectively, list the commutative and noncommutative operators you typically find in high-level languages.

Table 6-8: Common Commutative Binary Operators

| Pascal | C/C++ | Description |
|---|---|---|
| + | + | Addition |
| * | * | Multiplication |
| and | && or & | Logical or bitwise AND |
| or | \|\| or \| | Logical or bitwise OR |
| xor | ^ | (Logical or) bitwise exclusive-OR |
| = | == | Equality |
| <> | != | Inequality |

Table 6-9: Common Noncommutative Binary Operators

| Pascal | C/C++ | Description |
|---|---|---|
| - | - | Subtraction |
| / or div | / | Division |
| mod | % | Modulo or remainder |
| < | < | Less than |
| <= | <= | Less than or equal |
| > | > | Greater than |
| >= | >= | Greater than or equal |

6.3 Logical (Boolean) Expressions

Consider the following expression from a C/C++ program:

b = ((x == y) && (a <= d)) || ((z - a) != 5);

Here, b is a Boolean variable, and the remaining variables are all integers.

Although it takes only a single bit to represent a Boolean value, most assembly language programmers allocate a whole byte or word to represent Boolean variables. Most programmers (and, indeed, some programming languages like C) choose 0 to represent false and anything else to represent true. Some people prefer to represent true and false with 1 and 0 (respectively) and not allow any other values. Others select all 1 bits (0FFFF_FFFF_FFFF_FFFFh, 0FFFF_FFFFh, 0FFFFh, or 0FFh) for true and 0 for false. You could also use a positive value for true and a negative value for false. All these mechanisms have their advantages and drawbacks.

Using only 0 and 1 to represent false and true offers two big advantages. First, the setcc instructions produce these results, so this scheme is compatible with those instructions. Second, the x86-64 logical instructions (and, or, xor, and, to a lesser extent, not) operate on these values exactly as you would expect. That is, if you have two Boolean variables a and b, then the following instructions perform the basic logical operations on these two variables:

; d = a AND b:

mov al, a

and al, b

mov d, al

; d = a OR b:

mov al, a

or al, b

mov d, al

; d = a XOR b:

mov al, a

xor al, b

mov d, al

; b = NOT a:

mov al, a ; Note that the NOT instruction does not

not al ; properly compute AL = NOT AL by itself.

and al, 1 ; That is, (NOT 0) does not equal 1. The AND

mov b, al ; instruction corrects this problem

mov al, a ; Another way to do b = NOT a;

xor al, 1 ; Inverts bit 0

mov b, al

As pointed out here, the not instruction will not properly compute logical negation. The bitwise not of 0 is 0FFh, and the bitwise not of 1 is 0FEh. Neither result is 0 or 1. However, by ANDing the result with 1, you get the proper result. Note that you can implement the not operation more efficiently by using the xor al, 1 instruction because it affects only the LO bit.

As it turns out, using 0 for false and anything else for true has a lot of subtle advantages. Specifically, the test for true or false is often implicit in the execution of any logical instruction. However, this mechanism suffers from a big disadvantage: you cannot use the x86-64 and, or, xor, and not instructions to implement the Boolean operations of the same name. Consider the two values 55h and 0AAh. They’re both nonzero, so they both represent the value true. However, if you logically AND 55h and 0AAh together by using the x86-64 and instruction, the result is 0. True AND true should produce true, not false. Although you can account for situations like this, it usually requires a few extra instructions and is somewhat less efficient when computing Boolean operations.
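The behavior of the bitwise instructions on the two Boolean representations can be modeled in C. This is a minimal sketch with hypothetical function names: the first two functions assume the 0/1 representation, and the last two show why bitwise AND fails for the "anything nonzero is true" representation unless the operands are normalized first.

```c
#include <stdint.h>

/* Logical NOT for a 0/1 Boolean: bitwise NOT followed by AND with 1
   (models: not al / and al, 1). */
uint8_t not_via_and(uint8_t a) {
    return (uint8_t)(~a & 1);
}

/* The same result with a single XOR that inverts bit 0
   (models: xor al, 1). */
uint8_t not_via_xor(uint8_t a) {
    return (uint8_t)(a ^ 1);
}

/* Bitwise AND of two "nonzero means true" values; the result can be
   0 (false) even when both operands are true. */
uint8_t bitwise_and(uint8_t a, uint8_t b) {
    return (uint8_t)(a & b);
}

/* Normalizing each operand to 0 or 1 first restores the expected result,
   at the cost of extra work. */
uint8_t logical_and(uint8_t a, uint8_t b) {
    return (uint8_t)((a != 0) & (b != 0));
}
```

With the operands 55h and 0AAh from the text, bitwise_and returns 0 while logical_and returns 1.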

A system that uses nonzero values to represent true and 0 to represent false is an arithmetic logical system. A system that uses two distinct values like 0 and 1 to represent false and true is called a Boolean logical system, or simply a Boolean system. You can use either system, as convenient. Consider again this Boolean expression:

b = ((x == y) && (a <= d)) || ((z - a) != 5);

The resulting assembly code might be as follows:

mov eax, x

cmp eax, y

sete al ; AL = x == y;

mov ebx, a

cmp ebx, d

setle bl ; BL = a <= d;

and bl, al ; BL = (x == y) && (a <= d);

mov eax, z

sub eax, a

cmp eax, 5

setne al

or al, bl ; AL = ((x == y) && (a <= d)) ||

mov b, al ; ((z - a) != 5);

When working with Boolean expressions, don’t forget that you might be able to optimize your code by simplifying them with algebraic transformations. In Chapter 7, you’ll also see how to use control flow to calculate a Boolean result, which is generally quite a bit more efficient than using complete Boolean evaluation, as the examples in this section teach.
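Complete Boolean evaluation, as used in the setcc sequence above, can be sketched in C by computing every comparison to a 0/1 value and combining the results with bitwise operators rather than the short-circuiting && and ||. The function name and the local variables al and bl are illustrative only; they are named after the registers they stand in for.

```c
#include <stdint.h>

uint8_t eval_bool(int32_t x, int32_t y, int32_t a, int32_t d, int32_t z) {
    uint8_t al = (uint8_t)(x == y);    /* cmp / sete al      */
    uint8_t bl = (uint8_t)(a <= d);    /* cmp / setle bl     */
    bl &= al;                          /* and bl, al         */
    al = (uint8_t)((z - a) != 5);      /* sub / cmp / setne  */
    al |= bl;                          /* or al, bl          */
    return al;                         /* mov b, al          */
}
```

Every subexpression is evaluated on every call, regardless of the operand values, which is what distinguishes this approach from the control-flow technique.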

6.4 Machine and Arithmetic Idioms

An idiom is an idiosyncrasy (a peculiarity). Several arithmetic operations and x86-64 instructions have idiosyncrasies that you can take advantage of when writing assembly language code. Some people refer to the use of machine and arithmetic idioms as tricky programming that you should always avoid in well-written programs. While it is wise to avoid tricks just for the sake of tricks, many machine and arithmetic idioms are well known and commonly found in assembly language programs. You will see some important idioms all the time, so it makes sense to discuss them.

6.4.1 Multiplying Without mul or imul

When multiplying by a constant, you can sometimes write faster code by using shifts, additions, and subtractions in place of multiplication instructions.

Remember, a shl instruction that shifts by one bit computes the same result as multiplying the specified operand by 2. Shifting to the left two bit positions multiplies the operand by 4. Shifting to the left three bit positions multiplies the operand by 8. In general, shifting an operand to the left n bits multiplies it by 2^n. You can multiply any value by a constant by using a series of shifts and additions or shifts and subtractions. For example, to multiply the AX register by 10, you need only multiply it by 8 and then add two times the original value. That is, 10 × AX = 8 × AX + 2 × AX. The code to accomplish this is as follows:

shl ax, 1 ; Multiply AX by 2

mov bx, ax ; Save 2 * AX for later

shl ax, 2 ; Multiply AX by 8 (*4 really,

; but AX contains *2)

add ax, bx ; Add in AX * 2 to AX * 8 to get AX * 10

If you look at the instruction timings, the preceding shift-and-add example requires fewer clock cycles on some processors in the 80x86 family than the mul instruction. Of course, the code is somewhat larger (by a few bytes), but the performance improvement is usually worth it.

You can also use subtraction with shifts to perform a multiplication operation. Consider the following multiplication by 7:

mov ebx, eax ; Save EAX * 1

shl eax, 3 ; EAX = EAX * 8

sub eax, ebx ; EAX * 8 - EAX * 1 is EAX * 7

A common error that beginning assembly language programmers make is subtracting or adding 1 or 2 rather than EAX × 1 or EAX × 2. The following does not compute EAX × 7:

shl eax, 3

sub eax, 1

It computes (8 × EAX) – 1, something entirely different (unless, of course, EAX = 1). Beware of this pitfall when using shifts, additions, and subtractions to perform multiplication operations.
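The shift-and-add identities, and the pitfall just described, are easy to confirm with a few lines of C. The function names below are hypothetical; unsigned arithmetic is used so that the shifts wrap the way shl does rather than invoking undefined behavior.

```c
#include <stdint.h>

/* 10 * x = 8 * x + 2 * x */
uint32_t times10(uint32_t x) {
    return (x << 3) + (x << 1);
}

/* 7 * x = 8 * x - 1 * x */
uint32_t times7(uint32_t x) {
    return (x << 3) - x;
}

/* The pitfall: this computes 8 * x - 1, not 7 * x. */
uint32_t times7_wrong(uint32_t x) {
    return (x << 3) - 1;
}
```

For x = 12, times7 returns 84 while times7_wrong returns 95; the two agree only when x = 1.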

You can also use the lea instruction to compute certain products. The trick is to use the scaled-index addressing modes. The following examples demonstrate some simple cases:

lea eax, [ecx][ecx] ; EAX = ECX * 2

lea eax, [eax][eax * 2] ; EAX = EAX * 3

lea eax, [eax * 4] ; EAX = EAX * 4

lea eax, [ebx][ebx * 4] ; EAX = EBX * 5

lea eax, [eax * 8] ; EAX = EAX * 8

lea eax, [edx][edx * 8] ; EAX = EDX * 9

As time has progressed, Intel (and AMD) has improved the performance of the imul instruction to the point that it rarely makes sense to try to improve performance by using strength-reduction optimizations such as substituting shifts and additions for a multiplication. You should consult the Intel and AMD documentation (particularly the section on instruction timing) to see if a multi-instruction sequence is faster. Generally, a single shift instruction (for multiplication by a power of 2) or lea is going to produce better results than imul; beyond that, it’s best to measure and see.

6.4.2 Dividing Without div or idiv

Just as the shl instruction is useful for simulating a multiplication by a power of 2, the shr and sar instructions can simulate a division by a power of two. Unfortunately, you cannot easily use shifts, additions, and subtractions to perform division by an arbitrary constant. Therefore, this trick is useful only when dividing by powers of 2. Also, don’t forget that the sar instruction rounds toward negative infinity, unlike the idiv instruction, which rounds toward 0.
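The rounding difference between sar and idiv shows up only for negative dividends that are not exact multiples of the divisor. The following C sketch models both behaviors. The function names are hypothetical, and sar_model deliberately avoids right-shifting a negative value because C leaves that operation implementation-defined; it assumes 0 <= n <= 31.

```c
#include <stdint.h>

/* C99 and later define integer division as truncating toward zero,
   which matches the quotient idiv produces. */
int32_t div_trunc(int32_t x, int32_t divisor) {
    return x / divisor;
}

/* Models sar x, n: divide by 2^n, rounding toward negative infinity. */
int32_t sar_model(int32_t x, unsigned n) {
    if (x >= 0) {
        return x >> n;
    }
    /* For negative x, floor(x / 2^n) = -ceil(|x| / 2^n). */
    int64_t bias = ((int64_t)1 << n) - 1;
    int64_t magnitude = -(int64_t)x + bias;
    return (int32_t)(-(magnitude >> n));
}
```

Dividing -7 by 2 gives -3 with div_trunc but -4 with sar_model(-7, 1); for nonnegative values the two always agree.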

You can also divide by a value by multiplying by its reciprocal. Because the mul instruction is faster than the div instruction, multiplying by a reciprocal is usually faster than division.

To multiply by a reciprocal when dealing with integers, we must cheat. If you want to multiply by 1/10, there is no way you can load the value 1/10 into an x86-64 integer register prior to performing the multiplication. However, we could multiply 1/10 by 10, perform the multiplication, and then divide the result by 10 to get the final result. Of course, this wouldn’t buy you anything; in fact, it would make things worse because you’re now doing a multiplication by 10 as well as a division by 10. However, suppose you multiply 1/10 by 65,536 (giving 6554 after rounding), perform the multiplication, and then divide by 65,536. This would still perform the correct operation, and, as it turns out, if you set up the problem correctly, you can get the division operation for free. Consider the following code that divides AX by 10:

mov dx, 6554 ; 6554 = round(65,536 / 10)

mul dx

This code leaves AX/10 in the DX register.

To understand how this works, consider what happens when you use the mul instruction to multiply AX by 65,536 (1_0000h). This moves AX into DX and sets AX to 0 (a multiplication by 1_0000h is equivalent to a shift left by 16 bits). Multiplying by 6554 (65,536 divided by 10) puts AX divided by 10 into the DX register. Because mul is faster than div, this technique runs a little faster than using division.

Multiplying by a reciprocal works well when you need to divide by a constant. You could even use this approach to divide by a variable, but the overhead to compute the reciprocal pays off only if you perform the division many, many times by the same value.
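Because 6554 is a rounded-up approximation of 65,536 / 10, it is worth checking over what input range the reciprocal trick matches true division. The following C sketch models the mul dx sequence and searches all 16-bit inputs for the first disagreement; the function names are hypothetical, and the brute-force search is just one simple way to do the check.

```c
#include <stdint.h>

/* Models: mov dx, 6554 / mul dx, then reads DX (the HO 16 bits of DX:AX). */
uint16_t div10_recip(uint16_t ax) {
    uint32_t product = (uint32_t)ax * 6554u;   /* 32-bit product in DX:AX */
    return (uint16_t)(product >> 16);          /* DX                      */
}

/* Returns the smallest 16-bit input for which the reciprocal result
   differs from true unsigned division by 10, or -1 if there is none. */
int32_t first_mismatch(void) {
    for (uint32_t v = 0; v <= 0xFFFFu; v++) {
        if (div10_recip((uint16_t)v) != v / 10u) {
            return (int32_t)v;
        }
    }
    return -1;
}
```

With this 16-bit multiplier, the first disagreement occurs at 16,389, where the sketch yields 1639 rather than 1638. If you need exact results over the full input range, you would need a more precise reciprocal (more bits) or a correction step.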

6.4.3 Implementing Modulo-N Counters with AND

If you want to implement a counter variable that counts up to 2^n – 1 and then resets to 0, use the following code:

inc CounterVar

and CounterVar, n_bits

where n_bits is a binary value containing n bits of 1s right-justified in the number. For example, to create a counter that cycles between 0 and 15 (2^4 – 1), you could use the following:

inc CounterVar

and CounterVar, 00001111b

6.5 Floating-Point Arithmetic

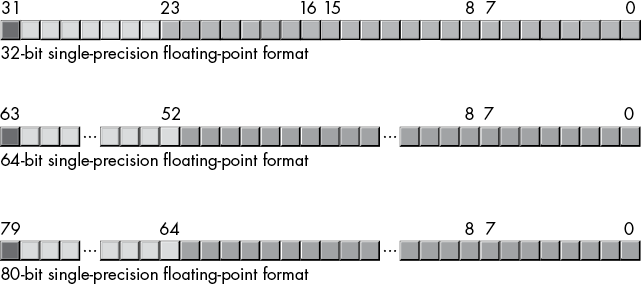

Integer arithmetic does not let you represent fractional numeric values. Therefore, modern CPUs support an approximation of real arithmetic: floating-point arithmetic. To represent real numbers, most floating-point formats employ scientific notation and use a certain number of bits to represent a mantissa and a smaller number of bits to represent an exponent.

For example, in the number 3.456e+12, the mantissa consists of 3.456, and the exponent digits are 12. Because the number of bits is fixed in computer-based representations, computers can represent only a certain number of digits (known as significant digits) in the mantissa. For example, if a floating-point representation could handle only three significant digits, then the fourth digit in 3.456e+12 (the 6) could not be accurately represented with that format, as three significant digits can represent only 3.45e+12 correctly.

Because computer-based floating-point representations also use a finite number of bits to represent the exponent, they also have a limited range of values, ranging from 10^±38 for the single-precision format to 10^±308 for the double-precision format (and up to 10^±4932 for the extended-precision format). This is known as the dynamic range of the value.

A big problem with floating-point arithmetic is that it does not follow the standard rules of algebra. Normal algebraic rules apply only to infinite-precision arithmetic.

Consider the simple statement x = x + 1, where x is an integer. On any modern computer, this statement follows the normal rules of algebra as long as overflow does not occur. That is, this statement is valid only for certain values of x (minint ≤ x < maxint). Most programmers do not have a problem with this because they are well aware that integers in a program do not follow the standard algebraic rules (for example, 5 / 2 does not equal 2.5).

Integers do not follow the standard rules of algebra because the computer represents them with a finite number of bits. You cannot represent any of the (integer) values above the maximum integer or below the minimum integer. Floating-point values suffer from this same problem, only worse. After all, integers are a subset of real numbers. Therefore, the floating-point values must represent the same infinite set of integers. However, an infinite number of real values exists between any two integer values. In addition to having to limit your values between a maximum and minimum range, you cannot represent all the values between any pair of integers, either.

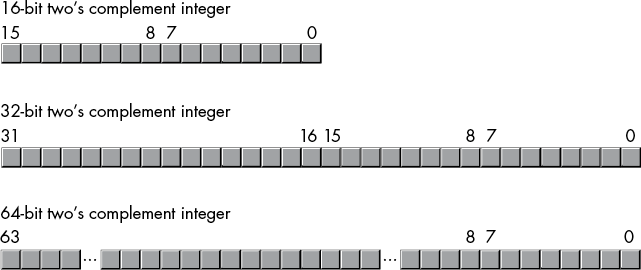

To demonstrate the impact of limited-precision arithmetic, we will adopt a simplified decimal floating-point format for our examples. Our floating-point format will provide a mantissa with three significant digits and a decimal exponent with two digits. The mantissa and exponents are both signed values, as shown in Figure 6-1.

Figure 6-1: A floating-point format

When adding and subtracting two numbers in scientific notation, we must adjust the two values so that their exponents are the same. Multiplication and division don’t require the exponents to be the same; instead, the exponent after a multiplication is the sum of the two operand exponents, and the exponent after a division is the difference of the dividend and divisor’s exponents.

For example, when adding 1.2e1 and 4.5e0, we must adjust the values so they have the same exponent. One way to do this is to convert 4.5e0 to 0.45e1 and then add. This produces 1.65e1. Because the computation and result require only three significant digits, we can compute the correct result via the representation shown in Figure 6-1. However, suppose we want to add the two values 1.23e1 and 4.56e0. Although both values can be represented using the three-significant-digit format, the computation and result do not fit into three significant digits. That is, 1.23e1 + 0.456e1 requires four digits of precision in order to compute the correct result of 1.686e1, so we must either round or truncate the result to three significant digits. Rounding generally produces the most accurate result, so let’s round the result to obtain 1.69e1.

In fact, the rounding does not occur after adding the two values together (that is, producing the sum 1.686e1 and then rounding this to 1.69e1). The rounding actually occurs when converting 4.56e0 to 0.456e1, because the value 0.456e1 requires four digits of precision to maintain. Therefore, during the conversion, we have to round it to 0.46e1 so that the result fits into three significant digits. Then, the sum of 1.23e1 and 0.46e1 produces the final (rounded) sum of 1.69e1.

As you can see, the lack of precision (the number of digits or bits we maintain in a computation) affects the accuracy (the correctness of the computation).

In the addition/subtraction example, we were able to round the result because we maintained four significant digits during the calculation (specifically, when converting 4.56e0 to 0.456e1). If our floating-point calculation had been limited to three significant digits during computation, we would have had to truncate the last digit of the smaller number, obtaining 0.45e1, resulting in a sum of 1.68e1, a value that is even less accurate.

To improve the accuracy of floating-point calculations, it is useful to maintain one or more extra digits for use during the calculation (such as the extra digit used to convert 4.56e0 to 0.456e1). Extra digits available during a computation are known as guard digits (or guard bits in the case of a binary format). They greatly enhance accuracy during a long chain of computations.

In a sequence of floating-point operations, the error can accumulate and greatly affect the computation itself. For example, suppose we were to add 1.23e3 to 1.00e0. Adjusting the numbers so their exponents are the same before the addition produces 1.23e3 + 0.001e3. The sum of these two values, even after rounding, is 1.23e3. This might seem perfectly reasonable to you; after all, we can maintain only three significant digits, so adding in a small value shouldn’t affect the result at all. However, suppose we were to add 1.00e0 to 1.23e3 10 times. The first time we add 1.00e0 to 1.23e3, we get 1.23e3. Likewise, we get this same result the second, third, fourth . . . and tenth times when we add 1.00e0 to 1.23e3. On the other hand, had we added 1.00e0 to itself 10 times, then added the result (1.00e1) to 1.23e3, we would have gotten a different result, 1.24e3. This is an important fact to know about limited-precision arithmetic:

The order of evaluation can affect the accuracy of the result.

You will get more accurate results if the relative magnitudes (the exponents) are close to one another when adding and subtracting floating-point values. If you are performing a chain calculation involving addition and subtraction, you should attempt to group the values appropriately.
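The same order-of-evaluation effect can be reproduced with binary floating-point values. The following C sketch is an illustration under stated assumptions: float is the IEEE 754 single-precision format, the default round-to-nearest mode is in effect, and the function names and the starting value 1.0e8 are chosen for the example rather than taken from the text. The volatile qualifier forces each intermediate sum to be stored as a 32-bit float so that wider registers cannot hide the rounding.

```c
/* Adds 1.0f to big, one step at a time, count times. */
float add_one_at_a_time(float big, int count) {
    volatile float sum = big;
    for (int i = 0; i < count; i++) {
        sum = sum + 1.0f;   /* each 1.0f is lost if big is large enough */
    }
    return sum;
}

/* Sums the small values first, then adds their total to big once. */
float add_grouped(float big, int count) {
    volatile float small = 0.0f;
    for (int i = 0; i < count; i++) {
        small = small + 1.0f;
    }
    volatile float sum = big + small;
    return sum;
}
```

Near 1.0e8, adjacent single-precision values are 8 apart, so adding 1.0 ten times leaves 1.0e8 unchanged, whereas adding the grouped total of 10.0 moves the result to the next representable value, 100,000,008.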

Another problem with addition and subtraction is that you can wind up with false precision. Consider the computation 1.23e0 – 1.22e0, which produces 0.01e0. Although the result is mathematically equivalent to 1.00e-2, this latter form suggests that the last two digits are exactly 0. Unfortunately, we have only a single significant digit at this time (remember, the original result was 0.01e0, and those two leading 0s were significant digits). Indeed, some floating-point units (FPUs) or software packages might actually insert random digits (or bits) into the LO positions. This brings up a second important rule concerning limited-precision arithmetic:

Subtracting two numbers with the same signs (or adding two numbers with different signs) can produce high-order significant digits (bits) that are 0. This reduces the number of significant digits (bits) by a like amount in the final result.

By themselves, multiplication and division do not produce particularly poor results. However, they tend to multiply any error that already exists in a value. For example, if you multiply 1.23e0 by 2, when you should be multiplying 1.24e0 by 2, the result is even less accurate. This brings up a third important rule when working with limited-precision arithmetic:

When performing a chain of calculations involving addition, subtraction, multiplication, and division, try to perform the multiplication and division operations first.

Often, by applying normal algebraic transformations, you can arrange a calculation so the multiply and divide operations occur first. For example, suppose you want to compute x * (y + z). Normally, you would add y and z together and multiply their sum by x. However, you will get a little more accuracy if you transform x * (y + z) to get x * y + x * z and compute the result by performing the multiplications first.

Multiplication and division are not without their own problems. When two very large or very small numbers are multiplied, it is quite possible for overflow or underflow to occur. The same situation occurs when dividing a small number by a large number, or dividing a large number by a small (fractional) number. This brings up a fourth rule you should attempt to follow when multiplying or dividing values:

When multiplying and dividing sets of numbers, try to arrange the multiplications so that they multiply large and small numbers together; likewise, try to divide numbers that have the same relative magnitudes.

Given the inaccuracies present in any computation (including converting an input string to a floating-point value), you should never compare two floating-point values to see if they are equal. In a binary floating-point format, different computations that produce the same (mathematical) result may differ in their least significant bits. For example, 1.31e0 + 1.69e0 should produce 3.00e0. Likewise, 1.50e0 + 1.50e0 should produce 3.00e0. However, if you were to compare (1.31e0 + 1.69e0) against (1.50e0 + 1.50e0), you might find out that these sums are not equal to one another. The test for equality succeeds if and only if all bits (or digits) in the two operands are exactly the same. Because this is not necessarily true after two different floating-point computations that should produce the same result, a straight test for equality may not work. Instead, you should use the following test:

if Value1 >= (Value2 - error) and Value1 <= (Value2 + error) then ...

Another common way to handle this same comparison is to use a statement of this form:

if abs(Value1 - Value2) <= error then ...

error should be a value slightly greater than the largest amount of error that will creep into your computations. The exact value will depend on the particular floating-point format you use. Here is the final rule we will state in this section:

When comparing two floating-point numbers, always compare one value to see if it is in the range given by the second value plus or minus a small error value.
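A tolerance-based comparison of this kind might look like the following in C. This is a sketch, not a general-purpose routine: the function names are hypothetical, the 0.1 + 0.2 example is a common illustration rather than one from the text, and it assumes that double is the IEEE 754 double-precision format. Choosing the tolerance remains the caller's responsibility, as the text explains.

```c
/* Returns 1 if a and b differ by no more than tolerance, else 0. */
int nearly_equal(double a, double b, double tolerance) {
    double diff = a - b;
    if (diff < 0.0) {
        diff = -diff;       /* absolute value without needing <math.h> */
    }
    return diff <= tolerance;
}

/* Computes 0.1 + 0.2 with the operands and the result stored as doubles. */
double sum_of_tenths(void) {
    volatile double a = 0.1;
    volatile double b = 0.2;
    volatile double sum = a + b;
    return sum;
}
```

Under the stated assumption, sum_of_tenths() does not compare equal to 0.3 with ==, but nearly_equal(sum_of_tenths(), 0.3, 1e-9) reports a match.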

Many other little problems can occur when using floating-point values. This book can point out only some of the major problems and make you aware that you cannot treat floating-point arithmetic like real arithmetic because of the inaccuracies present in limited-precision arithmetic. A good text on numerical analysis or even scientific computing can help fill in the details. If you are going to be working with floating-point arithmetic in any language, you should take the time to study the effects of limited-precision arithmetic on your computations.

6.5.1 Floating-Point on the x86-64

When the 8086 CPU first appeared in the late 1970s, semiconductor technology was not to the point where Intel could put floating-point instructions directly on the 8086 CPU. Therefore, Intel devised a scheme to use a second chip to perform the floating-point calculations: the 8087 floating-point unit (or x87 FPU). By the release of the Intel Pentium chip, semiconductor technology had advanced to the point that the FPU was fully integrated onto the x86 CPU. Today, the x86-64 still contains the x87 FPU device, but it has also expanded the floating-point capabilities by using the SSE, SSE2, AVX, and AVX2 instruction sets.

This section describes the x87 FPU instruction set. Later sections (and chapters) discuss the more advanced floating-point capabilities of the SSE through AVX2 instruction sets.

6.5.2 FPU Registers

The x87 FPUs add 14 registers to the x86-64: eight floating-point data registers, a control register, a status register, a tag register, an instruction pointer, a data pointer, and an opcode register. The data registers are similar to the x86-64’s general-purpose register set insofar as all floating-point calculations take place in these registers. The control register contains bits that let you decide how the FPU handles certain degenerate cases like rounding of inaccurate computations; it also contains bits that control precision and so on. The status register is similar to the x86-64’s FLAGS register; it contains the condition code bits and several other floating-point flags that describe the state of the FPU. The tag register contains several groups of bits that determine the state of the value in each of the eight floating-point data registers. The instruction, data pointer, and opcode registers contain certain state information about the last floating-point instruction executed. We do not consider the last four registers here; see the Intel documentation for more details.

6.5.2.1 FPU Data Registers

The FPUs provide eight 80-bit data registers organized as a stack, a significant departure from the organization of the general-purpose registers on the x86-64 CPU. MASM refers to these registers as ST(0), ST(1), . . . ST(7).

The biggest difference between the FPU register set and the x86-64 register set is the stack organization. On the x86-64 CPU, the AX register is always the AX register, no matter what happens. On the FPU, however, the register set is an eight-element stack of 80-bit floating-point values (Figure 6-2).

Figure 6-2: FPU floating-point register stack

ST(0) refers to the item on the top of stack, ST(1) refers to the next item on the stack, and so on. Many floating-point instructions push and pop items on the stack; therefore, ST(1) will refer to the previous contents of ST(0) after you push something onto the stack. Getting used to the register numbers changing will take some thought and practice, but this is an easy problem to overcome.
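The renumbering is easier to see in a short sketch. The variable names (x, y) and the procedure name are hypothetical; the fragment relies only on fld, which pushes a memory operand onto the FPU stack, and fstp st(0), which pops the top item:

```asm
            .data
x           real8   1.5         ; Hypothetical operands
y           real8   2.5

            .code
stackDemo   proc
            fld     x           ; ST(0) = x
            fld     y           ; ST(0) = y, ST(1) = x
            fstp    st(0)       ; Pop y: x is ST(0) again
            fstp    st(0)       ; Pop x: the stack is empty again
            ret
stackDemo   endp
            end
```

After the second fld, the value that was in ST(0) is reachable only as ST(1), even though it has not physically moved.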

6.5.2.2 The FPU Control Register

When Intel designed the 8087 (and, essentially, the IEEE floating-point standard), there were no standards in floating-point hardware. Different (mainframe and mini) computer manufacturers all had different and incompatible floating-point formats. Unfortunately, several applications had been written taking into account the idiosyncrasies of these different floating-point formats.

Intel wanted to design an FPU that could work with the majority of the software out there (keep in mind that the IBM PC was three to four years away when Intel began designing the 8087, so Intel couldn’t rely on that “mountain” of software available for the PC to make its chip popular). Unfortunately, many of the features found in these older floating-point formats were mutually incompatible. For example, in some floating-point systems, rounding would occur when there was insufficient precision; in others, truncation would occur. Some applications would work with one floating-point system but not with the other.

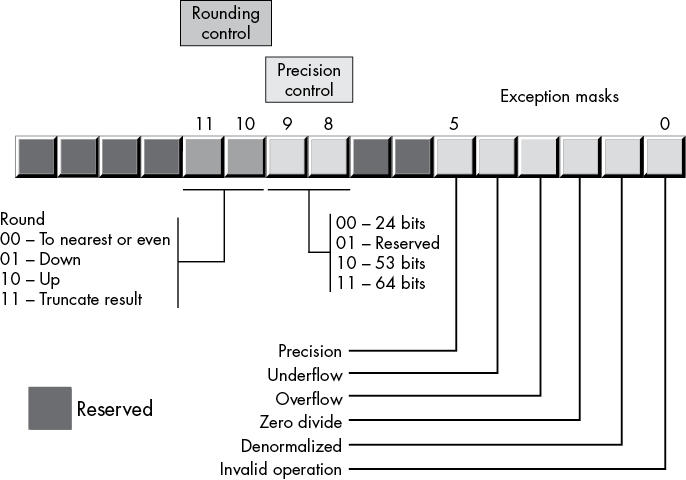

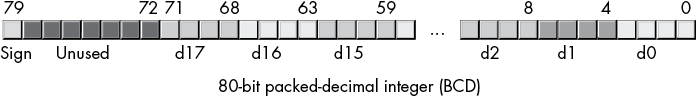

Intel wanted as many applications as possible to work with as few changes as possible on its 8087 FPUs, so it added a special register, the FPU control register, that lets the user choose one of several possible operating modes for the FPU. The 80x87 control register contains 16 bits organized as shown in Figure 6-3.

Figure 6-3: FPU control register

Bits 10 and 11 of the FPU control register provide rounding control according to the values in Table 6-10.

Table 6-10: Rounding Control

| Bits 10 and 11 | Function |
|---|---|
| 00 | To nearest or even |
| 01 | Round down |
| 10 | Round up |
| 11 | Truncate |

The 00 setting is the default. The FPU rounds up values above one-half of the least significant bit. It rounds down values below one-half of the least significant bit. If the value below the least significant bit is exactly one-half of the least significant bit, the FPU rounds the value toward the value whose least significant bit is 0. For long strings of computations, this provides a reasonable, automatic way to maintain maximum precision.

The round-up and round-down options are present for those computations requiring accuracy. By setting the rounding control to round down and performing the operation, then repeating the operation with the rounding control set to round up, you can determine the minimum and maximum ranges between which the true result will fall.
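As a minimal sketch of this bracketing technique, the following procedure computes 1.0/3.0 twice, once with round down and once with round up. The procedure name and all variable names are hypothetical, and the code assumes nothing else is on the FPU stack:

```asm
            .data
one         real8   1.0
three       real8   3.0
loBound     real8   ?               ; Result rounded down
hiBound     real8   ?               ; Result rounded up
savedCW     word    ?               ; Caller's control word
workCW      word    ?               ; Modified control word

            .code
divBounds   proc
            fstcw   savedCW
            mov     ax, savedCW
            and     ax, 0f3ffh      ; Clear bits 10-11
            or      ax, 0400h       ; Rounding control = %01 (round down)
            mov     workCW, ax
            fldcw   workCW
            fld     one
            fdiv    three           ; ST(0) = 1.0/3.0, rounded down
            fstp    loBound

            mov     ax, savedCW
            and     ax, 0f3ffh      ; Clear bits 10-11
            or      ax, 0800h       ; Rounding control = %10 (round up)
            mov     workCW, ax
            fldcw   workCW
            fld     one
            fdiv    three           ; ST(0) = 1.0/3.0, rounded up
            fstp    hiBound

            fldcw   savedCW         ; Restore the original rounding mode
            ret
divBounds   endp
            end
```

The true quotient lies between loBound and hiBound. Restoring the saved control word at the end keeps the change from leaking into the caller's computations.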

The truncate option forces all computations to truncate any excess bits. You will rarely use this option if accuracy is important. However, you might use this option to help when porting older software to the FPU. This option is also extremely useful when converting a floating-point value to an integer. Because most software expects floating-point–to–integer conversions to truncate the result, you will need to use the truncation/rounding mode to achieve this.
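One possible way to perform a truncating conversion is sketched below. The names (fpValue, intResult, truncToInt, and the two control-word variables) are hypothetical, and fistp is used here as the store-integer-and-pop instruction:

```asm
            .data
fpValue     real8   -3.75
intResult   dword   ?
savedCW     word    ?               ; Caller's control word
truncCW     word    ?               ; Control word with truncation selected

            .code
truncToInt  proc
            fstcw   savedCW         ; Save the current control word
            mov     ax, savedCW
            or      ax, 0c00h       ; Rounding control = %11 (truncate)
            mov     truncCW, ax
            fldcw   truncCW

            fld     fpValue         ; Push the value to convert
            fistp   intResult       ; Store as an integer and pop

            fldcw   savedCW         ; Restore the original rounding mode
            mov     eax, intResult  ; -3 here; round to nearest would give -4
            ret
truncToInt  endp
            end
```

Because the or instruction sets both rounding-control bits, there is no need to clear them first.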

Bits 8 and 9 of the control register specify the precision during computation. This capability is provided to allow compatibility with older software as required by the IEEE 754 standard. The precision-control bits use the values in Table 6-11.

Table 6-11: Mantissa Precision-Control Bits

| Bits 8 and 9 | Precision control |
|---|---|
| 00 | 24 bits |
| 01 | Reserved |
| 10 | 53 bits |
| 11 | 64 bits |

Some CPUs may operate faster with floating-point values whose precision is 53 bits (that is, 64-bit floating-point format) rather than 64 bits (that is, 80-bit floating-point format). See the documentation for your specific processor for details. Generally, the CPU defaults these bits to 11 to select the 64-bit mantissa precision.

Bits 0 to 5 are the exception masks. These are similar to the interrupt enable bit in the x86-64’s FLAGS register, except that the sense is inverted: if a mask bit contains 1, the FPU ignores the corresponding condition. However, if a mask bit contains 0 and the corresponding condition occurs, the FPU immediately generates an interrupt so the program can handle the degenerate condition.

Bit 0 corresponds to an invalid operation error, which generally occurs as the result of a programming error. Situations that raise the invalid operation exception include pushing more than eight items onto the stack, attempting to pop an item off an empty stack, taking the square root of a negative number, and loading a value into a register that is not empty.

Bit 1 masks the denormalized interrupt that occurs whenever you try to manipulate denormalized values. Denormalized exceptions occur when you load arbitrary extended-precision values into the FPU or work with very small numbers just beyond the range of the FPU’s capabilities. Normally, you would probably not enable this exception. If you enable this exception and the FPU generates this interrupt, the Windows runtime system raises an exception.

Bit 2 masks the zero-divide exception. If this bit contains 0, the FPU will generate an interrupt if you attempt to divide a nonzero value by 0. If you do not enable the zero-divide exception, the FPU will produce an appropriately signed infinity whenever you divide a nonzero value by 0. It’s probably a good idea to enable this exception by programming a 0 into this bit. Note that if your program generates this interrupt, the Windows runtime system will raise an exception.

Bit 3 masks the overflow exception. The FPU will raise the overflow exception if a calculation overflows or if you attempt to store a value that is too large to fit into the destination operand (for example, storing a large extended-precision value into a single-precision variable). If you enable this exception and the FPU generates this interrupt, the Windows runtime system raises an exception.

Bit 4, if set, masks the underflow exception. Underflow occurs when the result is too small to fit in the destination operand. Like overflow, this exception can occur whenever you store a small extended-precision value into a smaller variable (single or double precision) or when the result of a computation is too small for extended precision. If you enable this exception and the FPU generates this interrupt, the Windows runtime system raises an exception.

Bit 5 controls whether the precision exception can occur. A precision exception occurs whenever the FPU produces an imprecise result, generally the result of an internal rounding operation. Although many operations will produce an exact result, many more will not. For example, dividing 1 by 10 will produce an inexact result. Therefore, this bit is usually 1 because inexact results are common. If you enable this exception and the FPU generates this interrupt, the Windows runtime system raises an exception.

Bits 6 and 7, and 12 to 15, in the control register are currently undefined and reserved for future use (bits 7 and 12 were valid on older FPUs but are no longer used).
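For instance, enabling the zero-divide exception means clearing bit 2 of the control word while leaving the other bits alone. The sketch below assumes a hypothetical procedure name and scratch variable; it uses fnclex first so that a flag left over from an earlier operation does not trigger an exception as soon as the mask is cleared:

```asm
            .data
fcw16       word    ?

            .code
enableZeroDivide proc
            fnclex                  ; Discard any pending exception flags
            fstcw   fcw16           ; Fetch the current control word
            and     fcw16, 0fffbh   ; Bit 2 = 0: zero-divide exception enabled
            fldcw   fcw16           ; Install the modified control word
            ret
enableZeroDivide endp
            end
```

The same pattern applies to the other masks; only the bit cleared by the and instruction changes.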

The FPU provides two instructions, fldcw (load control word) and fstcw (store control word), that let you load and store the contents of the control register, respectively. The single operand to these instructions must be a 16-bit memory location. The fldcw instruction loads the control register from the specified memory location. fstcw stores the control register into the specified memory location. The syntax for these instructions is shown here:

    fldcw mem16
    fstcw mem16

Here’s some example code that sets the rounding control to truncate the result and sets the rounding precision to 24 bits:

                .data
    fcw16       word    ?
                 .
                 .
                 .
                fstcw   fcw16
                mov     ax, fcw16
                and     ax, 0f0ffh      ; Clears bits 8-11
                or      ax, 0c00h       ; Rounding control = %11, Precision = %00
                mov     fcw16, ax
                fldcw   fcw16

6.5.2.3 The FPU Status Register

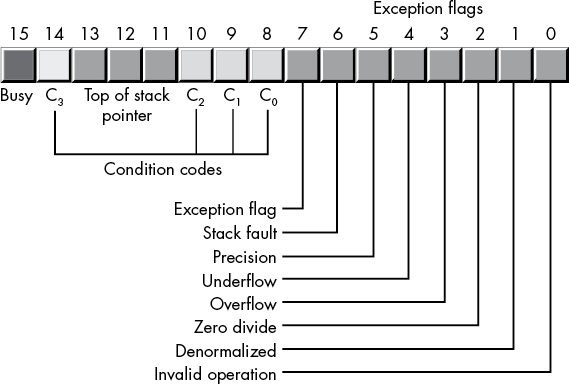

The 16-bit FPU status register provides the status of the FPU at the instant you read it; its layout appears in Figure 6-4. The fstsw instruction stores the 16-bit floating-point status register into a word variable.

Figure 6-4: The FPU status register

Bits 0 through 5 are the exception flags. These bits appear in the same order as the exception masks in the control register. If the corresponding condition has occurred, the bit is set. These bits are independent of the exception masks in the control register: the FPU sets them regardless of the corresponding mask setting, and a flag stays set until the program explicitly clears it (for example, with fclex or finit).

Bit 6 indicates a stack fault. A stack fault occurs whenever a stack overflow or underflow occurs. When this bit is set, the C1 condition code bit determines whether there was a stack overflow (C1 = 1) or stack underflow (C1 = 0) condition.

Bit 7 of the status register is the error summary bit. The FPU sets it when any unmasked exception flag (bits 0 through 5) is set, so a program can test this bit to quickly determine whether an error condition is pending.
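As a rough illustration of reading the exception flags, the following procedure performs a division and then tests bit 2 (the zero-divide flag) of the status word. All names are hypothetical, and the sketch assumes the zero-divide exception is masked (bit 2 of the control word is 1), so the FPU records the condition instead of generating an interrupt:

```asm
            .data
dividend    real8   1.0
divisor     real8   0.0
quotient    real8   ?

            .code
divideCheck proc
            fnclex                  ; Start with all exception flags clear
            fld     dividend
            fdiv    divisor         ; A masked zero divide sets status bit 2
            fstp    quotient
            fstsw   ax              ; AX = FPU status word
            and     eax, 04h        ; Isolate bit 2 (zero-divide flag)
            shr     eax, 2          ; EAX = 1 if a zero divide occurred, else 0
            ret
divideCheck endp
            end
```

Clearing the flags before the computation ensures that an earlier operation cannot influence the test.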

Bits 8, 9, 10, and 14 are the coprocessor condition code bits (C0, C1, C2, and C3, respectively). Various instructions set the condition code bits, as shown in Tables 6-12 and 6-13.

Table 6-12: FPU Comparison Condition Code Bits (X = “Don’t care”)

| Instruction | C3 | C2 | C1 | C0 | Condition |
|---|---|---|---|---|---|
| fcom, fcomp, fcompp, ficom, ficomp | 0 | 0 | X | 0 | ST > source |
| | 0 | 0 | X | 1 | ST < source |
| | 1 | 0 | X | 0 | ST = source |
| | 1 | 1 | X | 1 | ST or source not comparable |
| ftst | 0 | 0 | X | 0 | ST is positive |
| | 0 | 0 | X | 1 | ST is negative |
| | 1 | 0 | X | 0 | ST is 0 (+ or –) |
| | 1 | 1 | X | 1 | ST is not comparable |
| fxam | 0 | 0 | 0 | 0 | Unsupported |
| | 0 | 0 | 1 | 0 | Unsupported |
| | 0 | 1 | 0 | 0 | + Normalized |
| | 0 | 1 | 1 | 0 | – Normalized |
| | 1 | 0 | 0 | 0 | + 0 |
| | 1 | 0 | 1 | 0 | – 0 |
| | 1 | 1 | 0 | 0 | + Denormalized |
| | 1 | 1 | 1 | 0 | – Denormalized |
| | 0 | 0 | 0 | 1 | + NaN |
| | 0 | 0 | 1 | 1 | – NaN |
| | 0 | 1 | 0 | 1 | + Infinity |
| | 0 | 1 | 1 | 1 | – Infinity |
| | 1 | 0 | X | 1 | Empty register |
| fucom, fucomp, fucompp | 0 | 0 | X | 0 | ST > source |
| | 0 | 0 | X | 1 | ST < source |
| | 1 | 0 | X | 0 | ST = source |
| | 1 | 1 | X | 1 | Unordered/not comparable |

Table 6-13: FPU Condition Code Bits (X = “Don’t care”)

| Instruction | C3 | C2 | C1 | C0 |
|---|---|---|---|---|
| fcom, fcomp, fcompp, ftst, fucom, fucomp, fucompp, ficom, ficomp | Result of comparison, see Table 6-12. | Operands are not comparable. | Set to 0. | Result of comparison, see Table 6-12. |
| fxam | See Table 6-12. | See Table 6-12. | Sign of result, or stack overflow/underflow if stack exception bit is set. | See Table 6-12. |
| fprem, fprem1 | Bit 1 of quotient | 0 = reduction done; 1 = reduction incomplete | Bit 0 of quotient, or stack overflow/underflow if stack exception bit is set. | Bit 2 of quotient |
| fist, fbstp, frndint, fst, fstp, fadd, fmul, fdiv, fdivr, fsub, fsubr, fscale, fsqrt, fpatan, f2xm1, fyl2x, fyl2xp1 | Undefined | Undefined | Rounding direction if exception; otherwise, set to 0. | Undefined |
| fptan, fsin, fcos, fsincos | Undefined | Set to 1 if the operand is out of range; otherwise, 0. | Round-up occurred or stack overflow/underflow if stack exception bit is set. Undefined if C2 is set. | Undefined |
| fchs, fabs, fxch, fincstp, fdecstp, const loads, fxtract, fld, fild, fbld, fstp (80 bit) | Undefined | Undefined | Set to 0 or stack overflow/underflow if stack exception bit is set. | Undefined |
| fldenv, frstor | Restored from memory operand | Restored from memory operand | Restored from memory operand | Restored from memory operand |
| fldcw, fstenv, fstcw, fstsw, fclex | Undefined | Undefined | Undefined | Undefined |
| finit, fsave | Cleared to 0 | Cleared to 0 | Cleared to 0 | Cleared to 0 |
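To show how a program might consume the comparison codes from Table 6-12, here is a minimal sketch that returns 1 in EAX when one value is less than another. The procedure and variable names are hypothetical. The code relies on fstsw ax placing status bits 8 through 15 in AH, so C0, C2, and C3 land in bits 0, 2, and 6 of AH:

```asm
            .data
valA        real8   1.0             ; Hypothetical operands
valB        real8   2.0

            .code
isLess      proc
            fld     valB            ; ST(0) = valB
            fld     valA            ; ST(0) = valA, ST(1) = valB
            fcompp                  ; Compare ST(0) with ST(1), pop both
            fstsw   ax              ; AX = FPU status word
            and     ah, 45h         ; Keep C3, C2, and C0 only
            cmp     ah, 01h         ; C3 = 0, C2 = 0, C0 = 1: ST < source
            sete    al
            movzx   eax, al         ; EAX = 1 if valA < valB, else 0
            ret
isLess      endp
            end
```

Masking with 45h discards C1 (a "don't care" for comparisons) and the unrelated status bits. An unordered comparison leaves all three bits set, so it does not match the "less than" pattern.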

Bits 11 to 13 of the FPU status register provide the register number of the top of stack. During computations, the FPU adds (modulo 8) the logical register numbers supplied by the programmer to these 3 bits to determine the physical register number at runtime.
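A program can read this field by storing the status word and isolating the 3 bits. The following sketch (the procedure name is hypothetical) returns the physical register number of ST(0) in EAX:

```asm
            .code
getTop      proc
            fstsw   ax              ; AX = FPU status word
            shr     eax, 11         ; Move bits 11-13 down to bits 0-2
            and     eax, 7          ; EAX = physical register number of ST(0)
            ret
getTop      endp
            end
```

After finit, this field contains 0; each push decrements it (modulo 8), so the first value pushed lands in physical register 7.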

Bit 15 of the status register is the busy bit. It is set whenever the FPU is busy. This bit is a historical artifact from the days when the FPU was a separate chip; most programs will have little reason to access this bit.

6.5.3 FPU Data Types