11

SIMD Instructions

This chapter discusses the vector instructions on the x86-64. This special class of instructions provides parallel processing, traditionally known as single-instruction, multiple-data (SIMD) instructions because, quite literally, a single instruction operates on several pieces of data concurrently. As a result of this concurrency, SIMD instructions can often execute several times faster (in theory, as much as 32 to 64 times faster) than the comparable single-instruction, single-data (SISD), or scalar, instructions that compose the standard x86-64 instruction set.

The x86-64 actually provides three sets of vector instructions: the Multimedia Extensions (MMX) instruction set, the Streaming SIMD Extensions (SSE) instruction set, and the Advanced Vector Extensions (AVX) instruction set. This book does not consider the MMX instructions as they are obsolete (SSE equivalents exist for the MMX instructions).

The x86-64 vector instruction set (SSE/AVX) is almost as large as the scalar instruction set. A whole book could be written about SSE/AVX programming and algorithms. However, this is not that book; SIMD and parallel algorithms are an advanced subject beyond the scope of this book, so this chapter settles for introducing a fair number of SSE/AVX instructions and leaves it at that.

This chapter begins with some prerequisite information. First, it begins with a discussion of the x86-64 vector architecture and streaming data types. Then, it discusses how to detect the presence of various vector instructions (which are not present on all x86-64 CPUs) by using the cpuid instruction. Because most vector instructions require special memory alignment for data operands, this chapter also discusses MASM segments.

11.1 The SSE/AVX Architectures

Let’s begin by taking a quick look at the SSE and AVX features in the x64-86 CPUs. The SSE and AVX instructions have several variants: the original SSE, plus SSE2, SSE3, SSE3, SSE4 (SSE4.1 and SSE4.2), AVX, AVX2 (AVX and AVX2 are sometimes called AVX-256), and AVX-512. SSE3 was introduced along with the Pentium 4F (Prescott) CPU, Intel’s first 64-bit CPU. Therefore, you can assume that all Intel 64-bit CPUs support the SSE3 and earlier SIMD instructions.

The SSE/AVX architectures have three main generations:

- The SSE architecture, which (on 64-bit CPUs) provided sixteen 128-bit XMM registers supporting integer and floating-point data types

- The AVX/AVX2 architecture, which supported sixteen 256-bit YMM registers (also supporting integer and floating-point data types)

- The AVX-512 architecture, which supported up to thirty-two 512-bit ZMM registers

As a general rule, this chapter sticks to AVX2 and earlier instructions in its examples. Please see the Intel and AMD CPU manuals for a discussion of the additional instruction set extensions such as AVX-512. This chapter does not attempt to describe every SSE or AVX instruction. Most streaming instructions have very specialized purposes and aren’t particularly useful in generic applications.

11.2 Streaming Data Types

The SSE and AVX programming models support two basic data types: scalars and vectors. Scalars hold one single- or double-precision floating-point value. Vectors hold multiple floating-point or integer values (between 2 and 32 values, depending on the scalar data type of byte, word, dword, qword, single precision, or double precision, and the register and memory size of 128 or 256 bits).

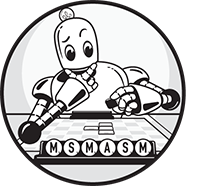

The XMM registers (XMM0 to XMM15) can hold a single 32-bit floating-point value (a scalar) or four single-precision floating-point values (a vector). The YMM registers (YMM0 to YMM15) can hold eight single-precision (32-bit) floating-point values (a vector); see Figure 11-1.

Figure 11-1: Packed and scalar single-precision floating-point data type

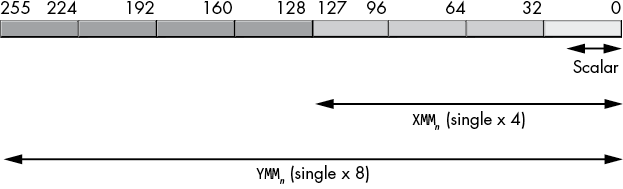

The XMM registers can hold a single double-precision scalar value or a vector containing a pair of double-precision values. The YMM registers can hold a vector containing four double-precision floating-point values, as shown in Figure 11-2.

Figure 11-2: Packed and scalar double-precision floating-point type

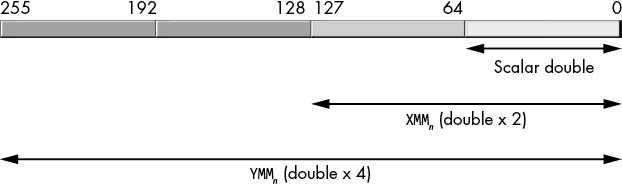

The XMM registers can hold 16 byte values (YMM registers can hold 32 byte values), allowing the CPU to perform 16 (32) byte-sized computations with one instruction (Figure 11-3).

Figure 11-3: Packed byte data type

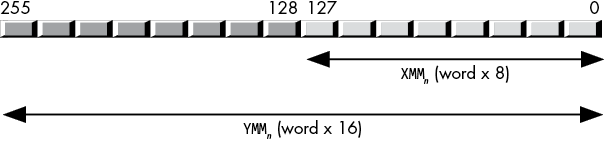

The XMM registers can hold eight word values (YMM registers can hold sixteen word values), allowing the CPU to perform eight (sixteen) 16-bit word-sized integer computations with one instruction (Figure 11-4).

Figure 11-4: Packed word data type

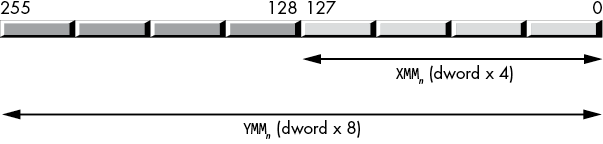

The XMM registers can hold four dword values (YMM registers can hold eight dword values), allowing the CPU to perform four (eight) 32-bit dword-sized integer computations with one instruction (Figure 11-5).

Figure 11-5: Packed double-word data type

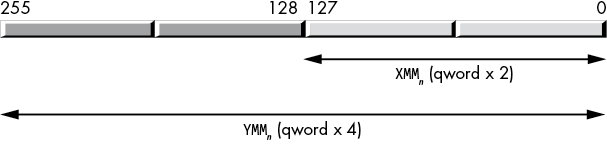

The XMM registers can hold two qword values (YMM registers can hold four qword values), allowing the CPU to perform two (four) 64-bit qword computations with one instruction (Figure 11-6).

Figure 11-6: Packed quad-word data type

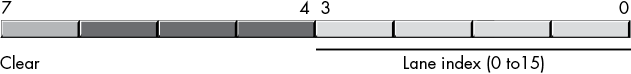

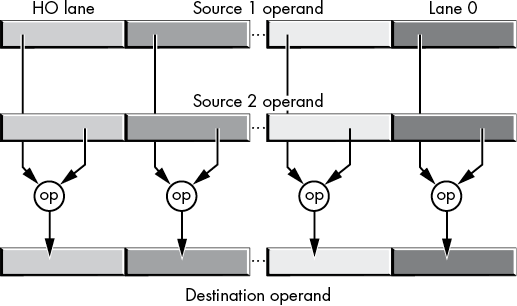

Intel’s documentation calls the vector elements in an XMM and a YMM register lanes. For example, a 128-bit XMM register has 16 bytes. Bits 0 to 7 are lane 0, bits 8 to 15 are lane 1, bits 16 to 23 are lane 2, . . . , and bits 120 to 127 are lane 15. A 256-bit YMM register has 32 byte-sized lanes, and a 512-bit ZMM register has 64 byte-sized lanes.

Similarly, a 128-bit XMM register has eight word-sized lanes (lanes 0 to 7). A 256-bit YMM register has sixteen word-sized lanes (lanes 0 to 15). On AVX-512-capable CPUs, a ZMM register (512 bits) has thirty-two word-sized lanes, numbered 0 to 31.

An XMM register has four dword-sized lanes (lanes 0 to 3); it also has four single-precision (32-bit) floating-point lanes (also numbered 0 to 3). A YMM register has eight dword or single-precision lanes (lanes 0 to 7). An AVX2 ZMM register has sixteen dword or single-precision-sized lanes (numbers 0 to 15).

XMM registers support two qword-sized lanes (or two double-precision lanes), numbered 0 to 1. As expected, a YMM register has twice as many (four lanes, numbered 0 to 3), and an AVX2 ZMM register has four times as many lanes (0 to 7).

Several SSE/AVX instructions refer to various lanes within these registers. In particular, the shuffle and unpack instructions allow you to move data between lanes in SSE and AVX operands. See “The Shuffle and Unpack Instructions” on page 625 for examples of lane usage.

11.3 Using cpuid to Differentiate Instruction Sets

Intel introduced the 8086 (and shortly thereafter, the 8088) microprocessor in 1978. With almost every succeeding CPU generation, Intel added new instructions to the instruction set. Until this chapter, this book has used instructions that are generally available on all x86-64 CPUs (Intel and AMD). This chapter presents instructions that are available only on later-model x86-64 CPUs. To allow programmers to determine which CPU their applications were using so they could dynamically avoid using newer instructions on older processors, Intel introduced the cpuid instruction.

The cpuid instruction expects a single parameter (called a leaf function) passed in the EAX register. It returns various pieces of information about the CPU in different 32-bit registers based on the value passed in EAX. An application can test the return information to see if certain CPU features are available.

As Intel introduced new instructions, it changed the behavior of cpuid to reflect those changes. Specifically, Intel changed the range of values a program could legally pass in EAX to cpuid; this is known as the highest function supported. As a result, some 64-bit CPUs accept only values in the range 0h to 05h. The instructions this chapter discusses may require passing values in the range 0h to 07h. Therefore, the first thing you have to do when using cpuid is to verify that it accepts EAX = 07h as a valid parameter.

To determine the highest function supported, you load EAX with 0 or 8000_0000h and execute the cpuid instruction (all 64-bit CPUs support these two function values). The return value is the maximum you can pass to cpuid in EAX. The Intel and AMD documentation (also see https://en.wikipedia.org/wiki/CPUID) will list the values cpuid returns for various CPUs; for the purposes of this chapter, we need only verify that the highest function supported is 01h (which is true for all 64-bit CPUs) or 07h for certain instructions.

In addition to providing the highest function supported, the cpuid instruction with EAX = 0h (or 8000_0002h) also returns a 12-character vendor ID in the EBX, ECX, and EDX registers. For x86-64 chips, this will be either of the following:

- GenuineIntel (EBX is 756e_6547h, EDX is 4965_6e69h, and ECX is 6c65_746eh)

- AuthenticAMD (EBX is 6874_7541h, EDX is 6974_6E65h, and ECX is 444D_4163h)

To determine if the CPU can execute most SSE and AVX instructions, you must execute cpuid with EAX = 01h and test various bits placed in the ECX register. For a few of the more advanced features (advanced bit-manipulation functions and AVX2 instructions), you’ll need to execute cpuid with EAX = 07h and check the results in the EBX register. The cpuid instruction (with EAX = 1) returns an interesting SSE/AVX feature flag in the following bits in ECX, as shown in Table 11-1; with EAX = 07h, it returns the bit manipulation or AVX2 flag in EBX, as shown in Table 11-2. If the bit is set, the CPU supports the specific instruction(s).

Table 11-1: Intel cpuid Feature Flags (EAX = 1)

| Bit | ECX |

| 0 | SSE3 support |

| 1 | PCLMULQDQ support |

| 9 | SSSE3 support |

| 19 | CPU supports SSE4.1 instructions |

| 20 | CPU supports SSE4.2 instructions |

| 28 | Advanced Vector Extensions |

Table 11-2: Intel cpuid Extended Feature Flags (EAX = 7, ECX = 0)

| Bit | EBX |

| 3 | Bit Manipulation Instruction Set 1 |

| 5 | Advanced Vector Extensions 2 (AVX2) |

| 8 | Bit Manipulation Instruction Set 2 |

Listing 11-1 queries the vendor ID and basic feature flags on a CPU.

; Listing 11-1

; CPUID Demonstration.

option casemap:none

nl = 10

.const

ttlStr byte "Listing 11-1", 0

.data

maxFeature dword ?

VendorID byte 14 dup (0)

.code

externdef printf:proc

; Return program title to C++ program:

public getTitle

getTitle proc

lea rax, ttlStr

ret

getTitle endp

; Used for debugging:

print proc

push rax

push rbx

push rcx

push rdx

push r8

push r9

push r10

push r11

push rbp

mov rbp, rsp

sub rsp, 40

and rsp, -16

mov rcx, [rbp + 72] ; Return address

call printf

mov rcx, [rbp + 72]

dec rcx

skipTo0: inc rcx

cmp byte ptr [rcx], 0

jne skipTo0

inc rcx

mov [rbp + 72], rcx

leave

pop r11

pop r10

pop r9

pop r8

pop rdx

pop rcx

pop rbx

pop rax

ret

print endp

; Here is the "asmMain" function.

public asmMain

asmMain proc

push rbx

push rbp

mov rbp, rsp

sub rsp, 56 ; Shadow storage

xor eax, eax

cpuid

mov maxFeature, eax

mov dword ptr VendorID, ebx

mov dword ptr VendorID[4], edx

mov dword ptr VendorID[8], ecx

lea rdx, VendorID

mov r8d, eax

call print

byte "CPUID(0): Vendor ID='%s', "

byte "max feature=0%xh", nl, 0

; Leaf function 1 is available on all CPUs that support

; CPUID, no need to test for it.

mov eax, 1

cpuid

mov r8d, edx

mov edx, ecx

call print

byte "cpuid(1), ECX=%08x, EDX=%08x", nl, 0

; Most likely, leaf function 7 is supported on all modern CPUs

; (for example, x86-64), but we'll test its availability nonetheless.

cmp maxFeature, 7

jb allDone

mov eax, 7

xor ecx, ecx

cpuid

mov edx, ebx

mov r8d, ecx

call print

byte "cpuid(7), EBX=%08x, ECX=%08x", nl, 0

allDone: leave

pop rbx

ret ; Returns to caller

asmMain endp

endListing 11-1: cpuid demonstration program

On an old MacBook Pro Retina with an Intel i7-3720QM CPU, running under Parallels, you get the following output:

C:\>build listing11-1

C:\>echo off

Assembling: listing11-1.asm

c.cpp

C:\>listing11-1

Calling Listing 11-1:

CPUID(0): Vendor ID='GenuineIntel', max feature=0dh

cpuid(1), ECX=ffba2203, EDX=1f8bfbff

cpuid(7), EBX=00000281, ECX=00000000

Listing 11-1 terminatedThis CPU supports SSE3 instructions (bit 0 of ECX is 1), SSE4.1 and SSE4.2 instructions (bits 19 and 20 of ECX are 1), and the AVX instructions (bit 28 is 1). Those, largely, are the instructions this chapter describes. Most modern CPUs will support these instructions (the i7-3720QM was released by Intel in 2012). The processor doesn’t support some of the more interesting extended features on the Intel instruction set (the extended bit-manipulation instructions and the AVX2 instruction set). Programs using those instructions will not execute on this (ancient) MacBook Pro.

Running this on a more recent CPU (an iMac Pro 10-core Intel Xeon W-2150B) produces the following output:

C:\>listing11-1

Calling Listing 11-1:

CPUID(0): Vendor ID='GenuineIntel', max feature=016h

cpuid(1), ECX=fffa3203, EDX=1f8bfbff

cpuid(7), EBX=d09f47bb, ECX=00000000

Listing 11-1 terminatedAs you can see, looking at the extended feature bits, the newer Xeon CPU does support these additional instructions. The code fragment in Listing 11-2 provides a quick modification to Listing 11-1 that tests for the availability of the BMI1 and BMI2 bit-manipulation instruction sets (insert the following code right before the allDone label in Listing 11-1).

; Test for extended bit manipulation instructions

; (BMI1 and BMI2):

and ebx, 108h ; Test bits 3 and 8

cmp ebx, 108h ; Both must be set

jne Unsupported

call print

byte "CPU supports BMI1 & BMI2", nl, 0

jmp allDone

Unsupported:

call print

byte "CPU does not support BMI1 & BMI2 "

byte "instructions", nl, 0

allDone: leave

pop rbx

ret ; Returns to caller

asmMain endpListing 11-2: Test for BMI1 and BMI2 instruction sets

Here’s the build command and program output on the Intel i7-3720QM CPU:

C:\>build listing11-2

C:\>echo off

Assembling: listing11-2.asm

c.cpp

C:\>listing11-2

Calling Listing 11-2:

CPUID(0): Vendor ID='GenuineIntel', max feature=0dh

cpuid(1), ECX=ffba2203, EDX=1f8bfbff

cpuid(7), EBX=00000281, ECX=00000000

CPU does not support BMI1 & BMI2 instructions

Listing 11-2 terminatedHere’s the same program running on the iMac Pro (Intel Xeon W-2150B):

C:\>listing11-2

Calling Listing 11-2:

CPUID(0): Vendor ID='GenuineIntel', max feature=016h

cpuid(1), ECX=fffa3203, EDX=1f8bfbff

cpuid(7), EBX=d09f47bb, ECX=00000000

CPU supports BMI1 & BMI2

Listing 11-2 terminated11.4 Full-Segment Syntax and Segment Alignment

As you will soon see, SSE and AVX memory data require alignment on 16-, 32-, and even 64-byte boundaries. Although you can use the align directive to align data (see “MASM Support for Data Alignment” in Chapter 3), it doesn’t work beyond 16-byte alignment when using the simplified segment directives presented thus far in this book. If you need alignment beyond 16 bytes, you have to use MASM full-segment declarations.

If you want to create a segment with complete control over segment attributes, you need to use the segment and ends directives.1 The generic syntax for a segment declaration is as follows:

segname segment readonly alignment 'class'

statements

segname endssegname is an identifier. This is the name of the segment (which must also appear before the closing ends directive). It need not be unique; you can have several segment declarations that share the same name. MASM will combine segments with the same name when emitting code to the object file. Avoid the segment names _TEXT, _DATA, _BSS, and _CONST, as MASM uses these names for the .code, .data, .data?, and .const directives, respectively.

The readonly option is either blank or the MASM-reserved word readonly. This is a hint to MASM that the segment will contain read-only (constant) data. If you attempt to (directly) store a value into a variable that you declare in a read-only segment, MASM will complain that you cannot modify a read-only segment.

The alignment option is also optional and allows you to specify one of the following options:

byteworddwordparapagealign(n)(n is a constant that must be a power of 2)

The alignment options tell MASM that the first byte emitted for this particular segment must appear at an address that is a multiple of the alignment option. The byte, word, and dword reserved words specify 1-, 2-, or 4-byte alignments. The para alignment option specifies paragraph alignment (16 bytes). The page alignment option specifies an address alignment of 256 bytes. Finally, the align(n) alignment option lets you specify any address alignment that is a power of 2 (1, 2, 4, 8, 16, 32, and so on).

The default segment alignment, if you don’t explicitly specify one, is paragraph alignment (16 bytes). This is also the default alignment for the simplified segment directives (.code, .data, .data?, and .const).

If you have some (SSE/AVX) data objects that must start at an address that is a multiple of 32 or 64 bytes, then creating a new data segment with 64-byte alignment is what you want. Here’s an example of such a segment:

dseg64 segment align(64)

obj64 oword 0, 1, 2, 3 ; Starts on 64-byte boundary

b byte 0 ; Messes with alignment

align 32 ; Sets alignment to 32 bytes

obj32 oword 0, 1 ; Starts on 32-byte boundary

dseg64 endsThe optional class field is a string (delimited by apostrophes and single quotes) that is typically one of the following names: CODE, DATA, or CONST. Note that MASM and the Microsoft linker will combine segments that have the same class name even if their segment names are different.

This chapter presents examples of these segment declarations as they are needed.

11.5 SSE, AVX, and AVX2 Memory Operand Alignment

SSE and AVX instructions typically allow access to a variety of memory operand sizes. The so-called scalar instructions, which operate on single data elements, can access byte-, word-, dword-, and qword-sized memory operands. In many respects, these types of memory accesses are similar to memory accesses by the non-SIMD instructions. The SSE, AVX, and AVX2 instruction set extensions also access packed or vector operands in memory. Unlike with the scalar memory operands, stringent rules limit the access of packed memory operands. This section discusses those rules.

The SSE instructions can access up to 128 bits of memory (16 bytes) with a single instruction. Most multi-operand SSE instructions can specify an XMM register or a 128-bit memory operand as their source (second) operand. As a general rule, these memory operands must appear on a 16-byte-aligned address in memory (that is, the LO 4 bits of the memory address must contain 0s).

Because segments have a default alignment of para (16 bytes), you can easily ensure that any 16-byte packed data objects are 16-byte-aligned by using the align directive:

align 16MASM will report an error if you attempt to use align 16 in a segment you’ve defined with the byte, word, or dword alignment type. It will work properly with para, page, or any align(n) option where n is greater than or equal to 16.

If you are using AVX instructions to access 256-bit (32-byte) memory operands, you must ensure that those memory operands begin on a 32-byte address boundary. Unfortunately, align 32 won’t work, because the default segment alignment is para (16-byte) alignment, and the segment’s alignment must be greater than or equal to the operand field of any align directives appearing within that segment. Therefore, to be able to define 256-bit variables usable by AVX instructions, you must explicitly define a (data) segment that is aligned on a (minimum) 32-byte boundary, such as the following:

avxData segment align(32)

align 32 ; This is actually redundant here

someData oword 0, 1 ; 256 bits of data

.

.

.

avxData endsThough it’s somewhat redundant to say this, it’s so important it’s worth repeating:

Almost all AVX/AVX2 instructions will generate an alignment fault if you attempt to access a 256-bit object at an address that is not 32-byte-aligned. Always ensure that your AVX packed operands are properly aligned.

If you are using the AVX2 extended instructions with 512-bit memory operands, you must ensure that those operands appear on an address in memory that is a multiple of 64 bytes. As for AVX instructions, you will have to define a segment that has an alignment greater than or equal to 64 bytes, such as this:

avx2Data segment align(64)

someData oword 0, 1, 2, 3 ; 512 bits of data

.

.

.

avx2Data endsForgive the redundancy, but it’s important to remember:

Almost all AVX-512 instructions will generate an alignment fault if you attempt to access a 512-bit object at an address that is not 64-byte-aligned. Always ensure that your AVX-512 packed operands are properly aligned.

If you’re using SSE, AVX, and AVX2 data types in the same application, you can create a single data segment to hold all these data values by using a 64-byte alignment option for the single section, instead of a segment for each data type size. Remember, the segment’s alignment has to be greater than or equal to the alignment required by the specific data type. Therefore, a 64-byte alignment will work fine for SSE and AVX/AVX2 variables, as well as AVX-512 variables:

SIMDData segment align(64)

sseData oword 0 ; 64-byte-aligned is also 16-byte-aligned

align 32 ; Alignment for AVX data

avxData oword 0, 1 ; 32 bytes of data aligned on 32 bytes

align 64

avx2Data oword 0, 1, 2, 3 ; 64 bytes of data

.

.

.

SIMDData endsIf you specify an alignment option that is much larger than you need (such as 256-byte page alignment), you might unnecessarily waste memory.

The align directive works well when your SSE, AVX, and AVX2 data values are static or global variables. What happens when you want to create local variables on the stack or dynamic variables on the heap? Even if your program adheres to the Microsoft ABI, you’re guaranteed only 16-byte alignment on the stack upon entry to your program (or to a procedure). Similarly, depending on your heap management functions, there is no guarantee that a malloc (or similar) function returns an address that is properly aligned for SSE, AVX, or AVX2 data objects.

Inside a procedure, you can allocate storage for a 16-, 32-, or 64-byte-aligned variable by over-allocating the storage, adding the size minus 1 of the object to the allocated address, and then using the and instruction to zero out LO bits of the address (4 bits for 16-byte-aligned objects, 5 bits for 32-byte-aligned objects, and 6 bits for 64-byte-aligned objects). Then you reference the object by using this pointer. The following sample code demonstrates how to do this:

sseproc proc

sseptr equ <[rbp - 8]>

avxptr equ <[rbp - 16]>

avx2ptr equ <[rbp - 24]>

push rbp

mov rbp, rsp

sub rsp, 160

; Load RAX with an address 64 bytes

; above the current stack pointer. A

; 64-byte-aligned address will be somewhere

; between RSP and RSP + 63.

lea rax, [rsp + 63]

; Mask out the LO 6 bits of RAX. This

; generates an address in RAX that is

; aligned on a 64-byte boundary and is

; between RSP and RSP + 63:

and rax, -64 ; 0FFFF...FC0h

; Save this 64-byte-aligned address as

; the pointer to the AVX2 data:

mov avx2ptr, rax

; Add 64 to AVX2's address. This skips

; over AVX2's data. The address is also

; 64-byte-aligned (which means it is

; also 32-byte-aligned). Use this as

; the address of AVX's data:

add rax, 64

mov avxptr, rax

; Add 32 to AVX's address. This skips

; over AVX's data. The address is also

; 32-byte-aligned (which means it is

; also 16-byte-aligned). Use this as

; the address of SSE's data:

add rax, 32

mov sseptr, rax

.

. Code that accesses the

. AVX2, AVX, and SSE data

. areas using avx2ptr,

. avxptr, and sseptr

leave

ret

sseproc endpFor data you allocate on the heap, you do the same thing: allocate extra storage (up to twice as many bytes minus 1), add the size of the object minus 1 (15, 31, or 63) to the address, and then mask the newly formed address with –64, –32, or –16 to produce a 64-, 32-, or 16-byte-aligned object, respectively.

11.6 SIMD Data Movement Instructions

The x86-64 CPUs provide a variety of data move instructions that copy data between (SSE/AVX) registers, load registers from memory, and store register values to memory. The following subsections describe each of these instructions.

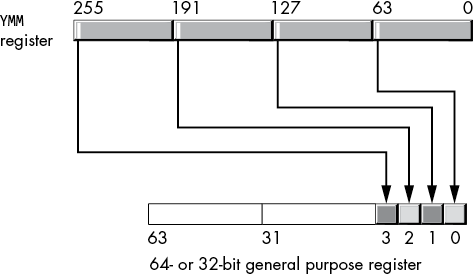

11.6.1 The (v)movd and (v)movq Instructions

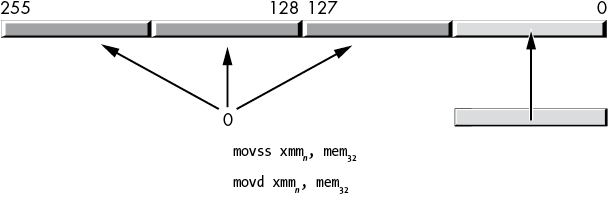

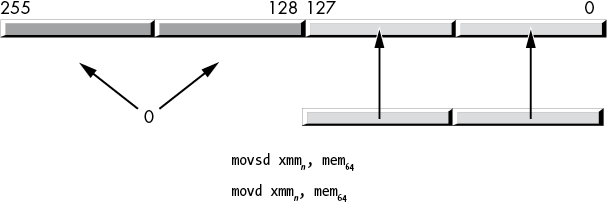

For the SSE instruction set, the movd (move dword) and movq (move qword) instructions copy the value from a 32- or 64-bit general-purpose register or memory location into the LO dword or qword of an XMM register:2

movd xmmn, reg32/mem32

movq xmmn, reg64/mem64These instructions zero-extend the value to remaining HO bits in the XMM register, as shown in Figures 11-7 and 11-8.

Figure 11-7: Moving a 32-bit value from memory to an XMM register (with zero extension)

Figure 11-8: Moving a 64-bit value from memory to an XMM register (with zero extension)

The following instructions store the LO 32 or 64 bits of an XMM register into a dword or qword memory location or general-purpose register:

movd reg32/mem32, xmmn

movq reg64/mem64, xmmnThe movq instruction also allows you to copy data from the LO qword of one XMM register to another, but for whatever reason, the movd instruction does not allow two XMM register operands:

movq xmmn, xmmnFor the AVX instructions, you use the following instructions:3

vmovd xmmn, reg32/mem32

vmovd reg32/mem32, xmmn

vmovq xmmn, reg64/mem64

vmovq reg64/mem64, xmmnThe instructions with the XMM destination operands also zero-extend their values into the HO bits (up to bit 255, unlike the standard SSE instructions that do not modify the upper bits of the YMM registers).

Because the movd and movq instructions access 32- and 64-bit values in memory (rather than 128-, 256-, or 512-bit values), these instructions do not require their memory operands to be 16-, 32-, or 64-byte-aligned. Of course, the instructions may execute faster if their operands are dword (movd) or qword (movq) aligned in memory.

11.6.2 The (v)movaps, (v)movapd, and (v)movdqa Instructions

The movaps (move aligned, packed single), movapd (move aligned, packed double), and movdqa (move double quad-word aligned) instructions move 16 bytes of data between memory and an XMM register or between two XMM registers. The AVX versions (with the v prefix) move 16 or 32 bytes between memory and an XMM or a YMM register or between two XMM or YMM registers (moves involving XMM registers zero out the HO bits of the corresponding YMM register). The memory locations must be aligned on a 16-byte or 32-byte boundary (respectively), or the CPU will generate an unaligned access fault.

All three mov* instructions load 16 bytes into an XMM register and are, in theory, interchangeable. In practice, Intel may optimize the operations for the type of data they move (single-precision floating-point values, double-precision floating-point values, or integer values), so it’s always a good idea to choose the appropriate instruction for the data type you are using (see “Performance Issues and the SIMD Move Instructions” on page 622 for an explanation). Likewise, all three vmov* instructions load 16 or 32 bytes into an XMM or a YMM register and are interchangeable.

These instructions take the following forms:

movaps xmmn, mem128 vmovaps xmmn, mem128 vmovaps ymmn, mem256

movaps mem128, xmmn vmovaps mem128, xmmn vmovaps mem256, ymmn

movaps xmmn, xmmn vmovaps xmmn, xmmn vmovaps ymmn, ymmn

movapd xmmn, mem128 vmovapd xmmn, mem128 vmovapd ymmn, mem256

movapd mem128, xmmn vmovapd mem128, xmmn vmovapd mem256, ymmn

movapd xmmn, xmmn vmovapd xmmn, xmmn vmovapd ymmn, ymmn

movdqa xmmn, mem128 vmovdqa xmmn, mem128 vmovdqa ymmn, mem256

movdqa mem128, xmmn vmovdqa mem128, xmmn vmovdqa mem256, ymmn

movdqa xmmn, xmmn vmovdqa xmmn, xmmn vmovdqa ymmn, ymmnThe mem128 operand should be a vector (array) of four single-precision floating-point values for the (v)movaps instruction; it should be a vector of two double-precision floating-point values for the (v)movapd instruction; it should be a 16-byte value (16 bytes, 8 words, 4 dwords, or 2 qwords) when using the (v)movdqa instruction. If you cannot guarantee that the operands are aligned on a 16-byte boundary, use the movups, movupd, or movdqu instructions, instead (see the next section).

The mem256 operand should be a vector (array) of eight single-precision floating-point values for the vmovaps instruction; it should be a vector of four double-precision floating-point values for the vmovapd instruction; it should be a 32-byte value (32 bytes, 16 words, 8 dwords, or 4 qwords) when using the vmovdqa instruction. If you cannot guarantee that the operands are 32-byte-aligned, use the vmovups, vmovupd, or vmovdqu instructions instead.

Although the physical machine instructions themselves don’t particularly care about the data type of the memory operands, MASM’s assembly syntax certainly does care. You will need to use operand type coercion if the instruction doesn’t match one of the following types:

- The

movapsinstruction allowsreal4,dword, andowordoperands. - The

movapdinstruction allowsreal8,qword, andowordoperands. - The

movdqainstruction allows onlyowordoperands. - The

vmovapsinstruction allowsreal4,dword, andymmword ptroperands (when using a YMM register). - The

vmovapdinstruction allowsreal8,qword, andymmword ptroperands (when using a YMM register). - The

vmovdqainstruction allows onlyymmword ptroperands (when using a YMM register).

Often you will see memcpy (memory copy) functions use the (v)movapd instructions for very high-performance operations. See Agner Fog’s website at https://www.agner.org/optimize/ for more details.

11.6.3 The (v)movups, (v)movupd, and (v)movdqu Instructions

When you cannot guarantee that packed data memory operands lie on a 16- or 32-byte address boundary, you can use the (v)movups (move unaligned packed single-precision), (v)movupd (move unaligned packed double-precision), and (v)movdqu (move double quad-word unaligned) instructions to move data between XMM or YMM registers and memory.

As for the aligned moves, all the unaligned moves do the same thing: copying 16 (32) bytes of data to and from memory. The convention for the various data types is the same as it is for the aligned data movement instructions.

11.6.4 Performance of Aligned and Unaligned Moves

Listings 11-3 and 11-4 provide sample programs that demonstrate the performance of the mova* and movu* instructions using aligned and unaligned memory accesses.

; Listing 11-3

; Performance test for packed versus unpacked

; instructions. This program times aligned accesses.

option casemap:none

nl = 10

.const

ttlStr byte "Listing 11-3", 0

dseg segment align(64) 'DATA'

; Aligned data types:

align 64

alignedData byte 64 dup (0)

dseg ends

.code

externdef printf:proc

; Return program title to C++ program:

public getTitle

getTitle proc

lea rax, ttlStr

ret

getTitle endp

; Used for debugging:

print proc

; Print code removed for brevity.

; See Listing 11-1 for actual code.

print endp

; Here is the "asmMain" function.

public asmMain

asmMain proc

push rbx

push rbp

mov rbp, rsp

sub rsp, 56 ; Shadow storage

call print

byte "Starting", nl, 0

mov rcx, 4000000000 ; 4,000,000,000

lea rdx, alignedData

mov rbx, 0

rptLp: mov rax, 15

rptLp2: movaps xmm0, xmmword ptr [rdx + rbx * 1]

movapd xmm0, real8 ptr [rdx + rbx * 1]

movdqa xmm0, xmmword ptr [rdx + rbx * 1]

vmovaps ymm0, ymmword ptr [rdx + rbx * 1]

vmovapd ymm0, ymmword ptr [rdx + rbx * 1]

vmovdqa ymm0, ymmword ptr [rdx + rbx * 1]

vmovaps zmm0, zmmword ptr [rdx + rbx * 1]

vmovapd zmm0, zmmword ptr [rdx + rbx * 1]

dec rax

jns rptLp2

dec rcx

jnz rptLp

call print

byte "Done", nl, 0

allDone: leave

pop rbx

ret ; Returns to caller

asmMain endp

endListing 11-3: Aligned memory-access timing code

; Listing 11-4

; Performance test for packed versus unpacked

; instructions. This program times unaligned accesses.

option casemap:none

nl = 10

.const

ttlStr byte "Listing 11-4", 0

dseg segment align(64) 'DATA'

; Aligned data types:

align 64

alignedData byte 64 dup (0)

dseg ends

.code

externdef printf:proc

; Return program title to C++ program:

public getTitle

getTitle proc

lea rax, ttlStr

ret

getTitle endp

; Used for debugging:

print proc

; Print code removed for brevity.

; See Listing 11-1 for actual code.

print endp

; Here is the "asmMain" function.

public asmMain

asmMain proc

push rbx

push rbp

mov rbp, rsp

sub rsp, 56 ; Shadow storage

call print

byte "Starting", nl, 0

mov rcx, 4000000000 ; 4,000,000,000

lea rdx, alignedData

rptLp: mov rbx, 15

rptLp2:

movups xmm0, xmmword ptr [rdx + rbx * 1]

movupd xmm0, real8 ptr [rdx + rbx * 1]

movdqu xmm0, xmmword ptr [rdx + rbx * 1]

vmovups ymm0, ymmword ptr [rdx + rbx * 1]

vmovupd ymm0, ymmword ptr [rdx + rbx * 1]

vmovdqu ymm0, ymmword ptr [rdx + rbx * 1]

vmovups zmm0, zmmword ptr [rdx + rbx * 1]

vmovupd zmm0, zmmword ptr [rdx + rbx * 1]

dec rbx

jns rptLp2

dec rcx

jnz rptLp

call print

byte "Done", nl, 0

allDone: leave

pop rbx

ret ; Returns to caller

asmMain endp

endListing 11-4: Unaligned memory-access timing code

The code in Listing 11-3 took about 1 minute and 7 seconds to execute on a 3GHz Xeon W CPU. The code in Listing 11-4 took 1 minute and 55 seconds to execute on the same processor. As you can see, there is sometimes an advantage to accessing SIMD data on an aligned address boundary.

11.6.5 The (v)movlps and (v)movlpd Instructions

The (v)movl* instructions and (v)movh* instructions (from the next section) might look like normal move instructions. Their behavior is similar to many other SSE/AVX move instructions. However, they were designed to support packing and unpacking floating-point vectors. Specifically, these instructions allow you to merge two pairs of single-precision or a pair of double-precision floating-point operands from two different sources into a single XMM register.

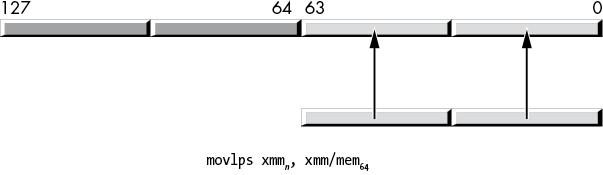

The (v)movlps instructions use the following syntax:

movlps xmmdest, mem64

movlps mem64, xmmsrc

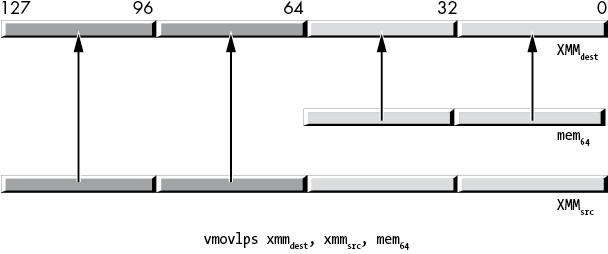

vmovlps xmmdest, xmmsrc, mem64

vmovlps mem64, xmmsrcThe movlps xmmdest, mem64 form copies a pair of single-precision floating-point values into the two LO 32-bit lanes of a destination XMM register, as shown in Figure 11-9. This instruction leaves the HO 64 bits unchanged.

Figure 11-9: movlps instruction

The movlps mem64, xmmsrc form copies the LO 64 bits (the two LO single-precision lanes) from the XMM source register to the specified memory location. Functionally, this is equivalent to the movq or movsd instructions (as it copies 64 bits to memory), though this instruction might be slightly faster if the LO 64 bits of the XMM register actually contain two single-precision values (see “Performance Issues and the SIMD Move Instructions” on page 622 for an explanation).

The vmovlps instruction has three operands: a destination XMM register, a source XMM register, and a source (64-bit) memory location. This instruction copies the two single-precision values from the memory location into the LO 64 bits of the destination XMM register. It copies the HO 64 bits of the source register (which also hold two single-precision values) into the HO 64 bits of the destination register. Figure 11-10 shows the operation. Note that this instruction merges the pair of operands with a single instruction.

Figure 11-10: vmovlps instruction

Like movsd, the movlpd (move low packed double) instruction copies the LO 64 bits (a double-precision floating-point value) of the source operand to the LO 64 bits of the destination operand. The difference is that the movlpd instruction doesn’t zero-extend the value when moving data from memory into an XMM register, whereas the movsd instruction will zero-extend the value into the upper 64 bits of the destination XMM register. (Neither the movsd nor movlpd will zero-extend when copying data between XMM registers; of course, zero extension doesn’t apply when storing data to memory.)4

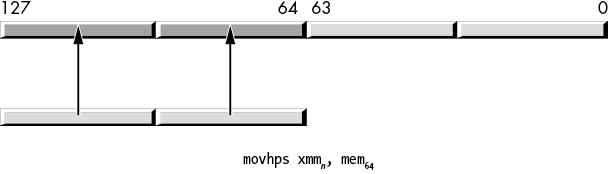

11.6.6 The movhps and movhpd Instructions

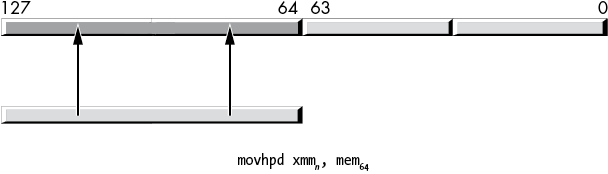

The movhps and movhpd instructions move a 64-bit value (either two single-precision floats in the case of movhps, or a single double-precision value in the case of movhpd) into the HO quad word of a destination XMM register. Figure 11-11 shows the operation of the movhps instruction; Figure 11-12 shows the movhpd instruction.

Figure 11-11: movhps instruction

Figure 11-12: movhpd instruction

The movhps and movhpd instructions can also store the HO quad word of an XMM register into memory. The allowable syntax is shown here:

movhps xmmn, mem64

movhps mem64, xmmn

movhpd xmmn, mem64

movhpd mem64, xmmnThese instructions do not affect bits 128 to 255 of the YMM registers (if present on the CPU).

You would normally use a movlps instruction followed by a movhps instruction to load four single-precision floating-point values into an XMM register, taking the floating-point values from two different data sources (similarly, you could use the movlpd and movhpd instructions to load a pair of double-precision values into a single XMM register from different sources). Conversely, you could also use this instruction to split a vector result in half and store the two halves in different data streams. This is probably the intended purpose of this instruction. Of course, if you can use it for other purposes, have at it.

MASM (version 14.15.26730.0, at least) seems to require movhps operands to be a 64-bit data type and does not allow real4 operands.5 Therefore, you may have to explicitly coerce an array of two real4 values with qword ptr when using this instruction:

r4m real4 1.0, 2.0, 3.0, 4.0

r8m real8 1.0, 2.0

.

.

.

movhps xmm0, qword ptr r4m2

movhpd xmm0, r8m11.6.7 The vmovhps and vmovhpd Instructions

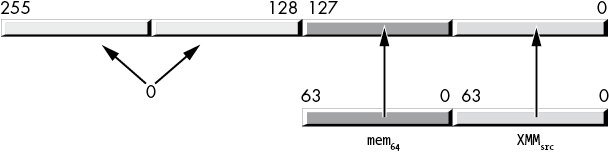

Although the AVX instruction extensions provide vmovhps and vmovhpd instructions, they are not a simple extension of the SSE movhps and movhpd instructions. The syntax for these instructions is as follows:

vmovhps xmmdest, xmmsrc, mem64

vmovhps mem64, xmmsrc

vmovhpd xmmdest, xmmsrc, mem64

vmovhpd mem64, xmmsrcThe instructions that store data into a 64-bit memory location behave similarly to the movhps and movhpd instructions. The instructions that load data into an XMM register have two source operands. They load a full 128 bits (four single-precision values or two double-precision values) into the destination XMM register. The HO 64 bits come from the memory operand; the LO 64 bits come from the LO quad word of the source XMM register, as Figure 11-13 shows. These instructions also zero-extend the value into the upper 128 bits of the (overlaid) YMM register.

Figure 11-13: vmovhpd and vmovhps instructions

Unlike for the movhps instruction, MASM properly accepts real4 source operands for the vmovhps instruction:

r4m real4 1.0, 2.0, 3.0, 4.0

r8m real8 1.0, 2.0

.

.

.

vmovhps xmm0, xmm1, r4m

vmovhpd xmm0, xmm1, r8m11.6.8 The movlhps and vmovlhps Instructions

The movlhps instruction moves a pair of 32-bit single-precision floating-point values from the LO qword of the source XMM register into the HO 64 bits of a destination XMM register. It leaves the LO 64 bits of the destination register unchanged. If the destination register is on a CPU that supports 256-bit AVX registers, this instruction also leaves the HO 128 bits of the overlaid YMM register unchanged.

The syntax for these instructions is as follows:

movlhps xmmdest, xmmsrc

vmovlhps xmmdest, xmmsrc1, xmmsrc2You cannot use this instruction to move data between memory and an XMM register; it transfers data only between XMM registers. No double-precision version of this instruction exists.

The vmovlhps instruction is similar to movlhps, with the following differences:

vmovlhpsrequires three operands: two source XMM registers and a destination XMM register.vmovlhpscopies the LO quad word of the first source register into the LO quad word of the destination register.vmovlhpscopies the LO quad word of the second source register into bits 64 to 127 of the destination register.vmovlhpszero-extends the result into the upper 128 bits of the overlaid YMM register.

There is no vmovlhpd instruction.

11.6.9 The movhlps and vmovhlps Instructions

The movhlps instruction has the following syntax:

movhlps xmmdest, xmmsrcThe movhlps instruction copies the pair of 32-bit single-precision floating-point values from the HO qword of the source operand to the LO qword of the destination register, leaving the HO 64 bits of the destination register unchanged (this is the converse of movlhps). This instruction copies data only between XMM registers; it does not allow a memory operand.

The vmovhlps instruction requires three XMM register operands; here is its syntax:

vmovhlps xmmdest, xmmsrc1, xmmsrc2This instruction copies the HO 64 bits of the first source register into the HO 64 bits of the destination register, copies the HO 64 bits of the second source register into bits 0 to 63 of the destination register, and finally, zero-extends the result into the upper bits of the overlaid YMM register.

There are no movhlpd or vmovhlpd instructions.

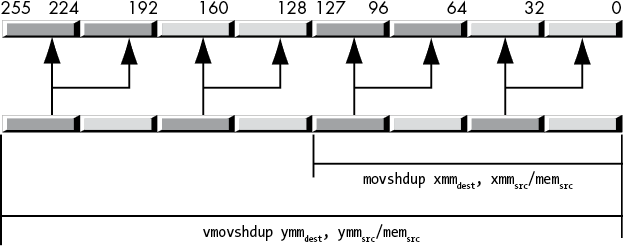

11.6.10 The (v)movshdup and (v)movsldup Instructions

The movshdup instruction moves the two odd-index single-precision floating-point values from the source operand (memory or XMM register) and duplicates each element into the destination XMM register, as shown in Figure 11-14.

Figure 11-14: movshdup and vmovshdup instructions

This instruction ignores the single-precision floating-point values at even-lane indexes into the XMM register. The vmovshdup instruction works the same way but on YMM registers, copying four single-precision values rather than two (and, of course, zeroing the HO bits). The syntax for these instructions is shown here:

movshdup xmmdest, mem128/xmmsrc

vmovshdup xmmdest, mem128/xmmsrc

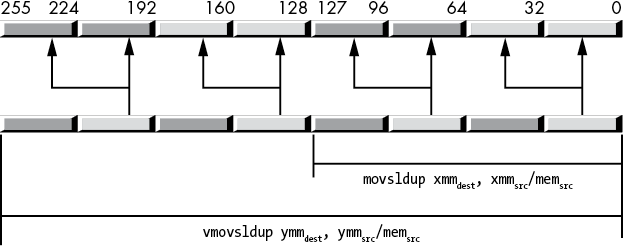

vmovshdup ymmdest, mem256/ymmsrcThe movsldup instruction works just like the movshdup instruction, except it copies and duplicates the two single-precision values at even indexes in the source XMM register to the destination XMM register. Likewise, the vmovsldup instruction copies and duplicates the four double-precision values in the source YMM register at even indexes, as shown in Figure 11-15.

Figure 11-15: movsldup and vmovsldup instructions

The syntax is as follows:

movsldup xmmdest, mem128/xmmsrc

vmovsldup xmmdest, mem128/xmmsrc

vmovsldup ymmdest, mem256/ymmsrc11.6.11 The (v)movddup Instruction

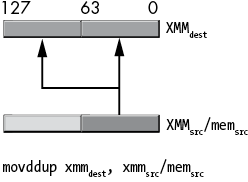

The movddup instruction copies and duplicates a double-precision value from the LO 64 bits of an XMM register or a 64-bit memory location into the LO 64 bits of a destination XMM register; then it also duplicates this value into bits 64 to 127 of that same destination register, as shown in Figure 11-16.

Figure 11-16: movddup instruction behavior

This instruction does not disturb the HO 128 bits of a YMM register (if applicable). The syntax for this instruction is as follows:

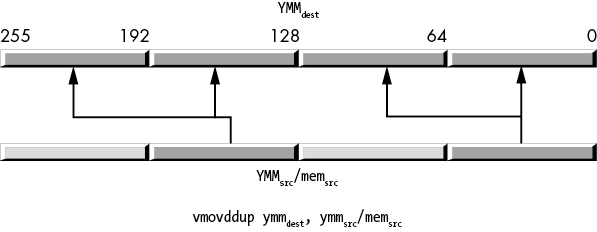

movddup xmmdest, mem64/xmmsrcThe vmovddup instruction operates on an XMM or a YMM destination register and an XMM or a YMM source register or 128- or 256-bit memory location. The 128-bit version works just like the movddup instruction except it zeroes the HO bits of the destination YMM register. The 256-bit version copies a pair of double-precision values at even indexes (0 and 2) in the source value to their corresponding indexes in the destination YMM register and duplicates those values at the odd indexes in the destination, as Figure 11-17 shows.

Figure 11-17: vmovddup instruction behavior

Here is the syntax for this instruction:

movddup xmmdest, mem64/xmmsrc

vmovddup ymmdest, mem256/ymmsrc11.6.12 The (v)lddqu Instruction

The (v)lddqu instruction is operationally identical to (v)movdqu. You can sometimes use this instruction to improve performance if the (memory) source operand is not aligned properly and crosses a cache line boundary in memory. For more details on this instruction and its performance limitations, refer to the Intel or AMD documentation (specifically, the optimization manuals).

These instructions always take the following form:

lddqu xmmdest, mem128

vlddqu xmmdest, mem128

vlddqu ymmdest, mem25611.6.13 Performance Issues and the SIMD Move Instructions

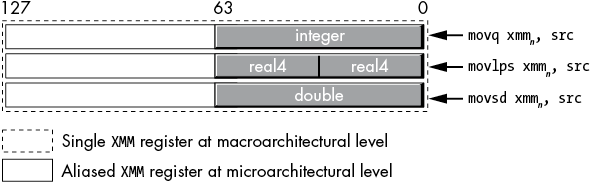

When you look at the SSE/AVX instructions’ semantics at the programming model level, you might question why certain instructions appear in the instruction set. For example, the movq, movsd, and movlps instructions can all load 64 bits from a memory location into the LO 64 bits of an XMM register. Why bother doing this? Why not have a single instruction that copies the 64 bits from a quad word in memory to the LO 64 bits of an XMM register (be it a 64-bit integer, a pair of 32-bit integers, a 64-bit double-precision floating-point value, or a pair of 32-bit single-precision floating-point values)? The answer lies in the term microarchitecture.

The x86-64 macroarchitecture is the programming model that a software engineer sees. In the macroarchitecture, an XMM register is a 128-bit resource that, at any given time, could hold a 128-bit array of bits (or an integer), a pair of 64-bit integer values, a pair of 64-bit double-precision floating-point values, a set of four single-precision floating-point values, a set of four double-word integers, eight words, or 16 bytes. All these data types overlay one another, just like the 8-, 16-, 32-, and 64-bit general-purpose registers overlay one another (this is known as aliasing). If you load two double-precision floating-point values into an XMM register and then modify the (integer) word at bit positions 0 to 15, you’re also changing those same bits (0 to 15) in the double-precision value in the LO qword of the XMM register. The semantics of the x86-64 programming model require this.

At the microarchitectural level, however, there is no requirement that the CPU use the same physical bits in the CPU for integer, single-precision, and double-precision values (even when they are aliased to the same register). The microarchitecture could set aside a separate set of bits to hold integers, single-precision, and double-precision values for a single register. So, for example, when you use the movq instruction to load 64 bits into an XMM register, that instruction might actually copy the bits into the underlying integer register (without affecting the single-precision or double-precision subregisters). Likewise, movlps would copy a pair of single-precision values into the single-precision register, and movsd would copy a double-precision value into the double-precision register (Figure 11-18). These separate subregisters (integer, single-precision, and double-precision) could be connected directly to the arithmetic or logical unit that handles their specific data types, making arithmetic and logical operations on those subregisters more efficient. As long as the data is sitting in the appropriate subregister, everything works smoothly.

Figure 11-18: Register aliasing at the microarchitectural level

However, what happens if you use movq to load a pair of single-precision floating-point values into an XMM register and then try to perform a single-precision vector operation on those two values? At the macroarchitectural level, the two single-precision values are sitting in the appropriate bit positions of the XMM register, so this has to be a legal operation. At the microarchitectural level, however, those two single-precision floating-point values are sitting in the integer subregister, not the single-precision subregister. The underlying microarchitecture has to note that the values are in the wrong subregister and move them to the appropriate (single-precision) subregister before performing the single-precision arithmetic or logical operation. This may introduce a slight delay (while the microarchitecture moves the data around), which is why you should always pick the appropriate move instructions for your data types.

11.6.14 Some Final Comments on the SIMD Move Instructions

The SIMD data movement instructions are a confusing bunch. Their syntax is inconsistent, many instructions duplicate the actions of other instructions, and they have some perplexing irregularity issues. Someone new to the x86-64 instruction set might ask, “Why was the instruction set designed this way?” Why, indeed?

The answer to that question is historical. The SIMD instructions did not exist on the earliest x86 CPUs. Intel added the MMX instruction set to the Pentium-series CPUs. At that time (the early 1990s), current technology allowed Intel to add only a few additional instructions, and the MMX registers were limited to 64 bits in size. Furthermore, software engineers and computer systems designers were only beginning to explore the multimedia capabilities of modern computers, so it wasn’t entirely clear which instructions (and data types) were necessary to support the type of software we see several decades later. As a result, the earliest SIMD instructions and data types were limited in scope.

As time passed, CPUs gained additional silicon resources, and software/systems engineers discovered new uses for computers (and new algorithms to run on those computers), so Intel (and AMD) responded by adding new SIMD instructions to support these more modern multimedia applications. The original MMX instructions, for example, supported only integer data types, so Intel added floating-point support in the SSE instruction set, because multimedia applications needed real data types. Then Intel extended the integer types from 64 bits to 128, 256, and even 512 bits. With each extension, Intel (and AMD) had to retain the older instruction set extensions in order to allow preexisting software to run on the new CPUs.

As a result, the newer instruction sets kept piling on new instructions that did the same work as the older ones (with some additional capabilities). This is why instructions like movaps and vmovaps have considerable overlap in their functionality. If the CPU resources had been available earlier (for example, to put 256-bit YMM registers on the CPU), there would have been almost no need for the movaps instruction—the vmovaps could have done all the work.6

In theory, we could create an architecturally elegant variant of the x86-64 by starting over from scratch and designing a minimal instruction set that handles all the activities of the current x86-64 without all the kruft and kludges present in the existing instruction set. However, such a CPU would lose the primary advantage of the x86-64: the ability to run decades of software written for the Intel architecture. The cost of being able to run all this old software is that assembly language programmers (and compiler writers) have to deal with all these irregularities in the instruction set.

11.7 The Shuffle and Unpack Instructions

The SSE/AVX shuffle and unpack instructions are variants of the move instructions. In addition to moving data around, these instructions can also rearrange the data appearing in different lanes of the XMM and YMM registers.

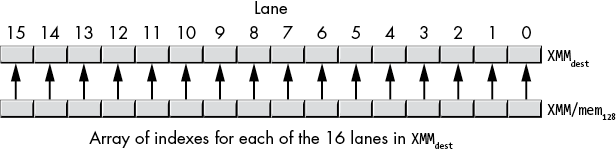

11.7.1 The (v)pshufb Instructions

The pshufb instruction was the first packed byte shuffle SIMD instruction (it first appeared with the MMX instruction set). Because of its origin, its syntax and behavior are a bit different from the other shuffle instructions in the instruction set. The syntax is the following:

pshufb xmmdest, xmm/mem128The first (destination) operand is an XMM register whose byte lanes pshufb will shuffle (rearrange). The second operand (either an XMM register or a 128-bit oword memory location) is an array of 16 byte values holding indexes that control the shuffle operation. If the second operand is a memory location, that oword value must be aligned on a 16-byte boundary.

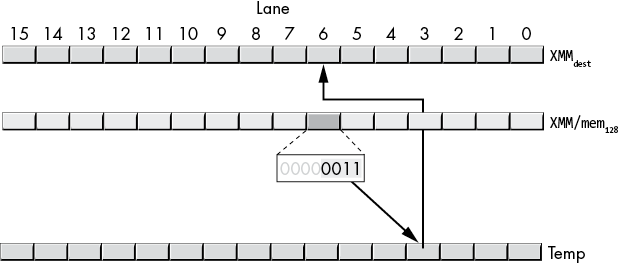

Each byte (lane) in the second operand selects a value for the corresponding byte lane in the first operand, as shown in Figure 11-19.

Figure 11-19: Lane index correspondence for pshufb instruction

The 16-byte indexes in the second operand each take the form shown in Figure 11-20.

Figure 11-20: phsufb byte index

The pshufb instruction ignores bits 4 to 6 in an index byte. Bit 7 is the clear bit; if this bit contains a 1, the pshufb instruction ignores the lane index bits and stores a 0 into the corresponding byte in XMMdest. If the clear bit contains a 0, the pshufb instruction does a shuffle operation.

The pshufb shuffle operation takes place on a lane-by-lane basis. The instruction first makes a temporary copy of XMMdest. Then for each index byte (whose HO bit is 0), the pshufb copies the lane specified by the LO 4 bits of the index from the XMMdest lane that matches the index’s lane, as shown in Figure 11-21. In this example, the index appearing in lane 6 contains the value 00000011b. This selects the value in lane 3 of the temporary (original XMMdest) value and copies it to lane 6 of XMMdest. The pshufb instruction repeats this operation for all l6 lanes.

Figure 11-21: Shuffle operation

The AVX instruction set extensions introduced the vpshufb instruction. Its syntax is the following:

vpshufb xmmdest, xmmsrc, xmmindex/mem128

vpshufb ymmdest, ymmsrc, ymmindex/mem256The AVX variant adds a source register (rather than using XMMdest as both the source and destination registers), and, rather than creating a temporary copy of XMMdest prior to the operation and picking the values from that copy, the vpshufb instructions select the source bytes from the XMMsrc register. Other than that, and the fact that these instructions zero the HO bits of YMMdest, the 128-bit variant operates identically to the SSE pshufb instruction.

The AVX instruction allows you to specify 256-bit YMM registers in addition to 128-bit XMM registers.7

11.7.2 The (v)pshufd Instructions

The SSE extensions first introduced the pshufd instruction. The AVX extensions added the vpshufd instruction. These instructions shuffle dwords in XMM and YMM registers (not double-precision values) similarly to the (v)pshufb instructions. However, the shuffle index is specified differently from (v)pshufb. The syntax for the (v)pshufd instructions is as follows:

pshufd xmmdest, xmmsrc/mem128, imm8

vpshufd xmmdest, xmmsrc/mem128, imm8

vpshufd ymmdest, ymmsrc/mem256, imm8The first operand (XMMdest or YMMdest) is the destination operand where the shuffled values will be stored. The second operand is the source from which the instruction will select the double words to place in the destination register; as usual, if this is a memory operand, you must align it on the appropriate (16- or 32-byte) boundary. The third operand is an 8-bit immediate value that specifies the indexes for the double words to select from the source operand.

For the (v)pshufd instructions with an XMMdest operand, the imm8 operand has the encoding shown in Table 11-3. The value in bits 0 to 1 selects a particular dword from the source operand to place in dword 0 of the XMMdest operand. The value in bits 2 to 3 selects a dword from the source operand to place in dword 1 of the XMMdest operand. The value in bits 4 to 5 selects a dword from the source operand to place in dword 2 of the XMMdest operand. Finally, the value in bits 6 to 7 selects a dword from the source operand to place in dword 3 of the XMMdest operand.

Table 11-3: (v)pshufd imm8 Operand Values

| Bit positions | Destination lane |

| 0 to 1 | 0 |

| 2 to 3 | 1 |

| 4 to 5 | 2 |

| 6 to 7 | 3 |

The difference between the 128-bit pshufd and vpshufd instructions is that pshufd leaves the HO 128 bits of the underlying YMM register unchanged and vpshufd zeroes the HO 128 bits of the underlying YMM register.

The 256-bit variant of vpshufd (when using YMM registers as the source and destination operands) still uses an 8-bit immediate operand as the index value. Each 2-bit index value manipulates two dword values in the YMM registers. Bits 0 to 1 control dwords 0 and 4, bits 2 to 3 control dwords 1 and 5, bits 4 to 5 control dwords 2 and 6, and bits 6 to 7 control dwords 3 and 7, as shown in Table 11-4.

Table 11-4: Double-Word Transfers for vpshufd YMMdest, YMMsrc/memsrc, imm8

| Index | YMM/memsrc [index] copied into | YMM/memsrc [index + 4] copied into |

| Bits 0 to 1 of imm8 | YMMdest[0] | YMMdest[4] |

| Bits 2 to 3 of imm8 | YMMdest[1] | YMMdest[5] |

| Bits 4 to 5 of imm8 | YMMdest[2] | YMMdest[6] |

| Bits 6 to 7 of imm8 | YMMdest[3] | YMMdest[7] |

The 256-bit version is slightly less flexible as it copies two dwords at a time, rather than one. It processes the LO 128 bits exactly the same way as the 128-bit version of the instruction; it also copies the corresponding lanes in the upper 128 bits of the source to the YMM destination register by using the same shuffle pattern. Unfortunately, you can’t independently control the HO and LO halves of the YMM register by using the vpshufd instruction. If you really need to shuffle dwords independently, you can use vshufb with appropriate indexes that copy 4 bytes (in place of a single dword).

11.7.3 The (v)pshuflw and (v)pshufhw Instructions

The pshuflw and vpshuflw and the pshufhw and vpshufhw instructions provide support for 16-bit word shuffles within an XMM or a YMM register. The syntax for these instructions is the following:

pshuflw xmmdest, xmmsrc/mem128, imm8

pshufhw xmmdest, xmmsrc/mem128, imm8

vpshuflw xmmdest, xmmsrc/mem128, imm8

vpshufhw xmmdest, xmmsrc/mem128, imm8

vpshuflw ymmdest, ymmsrc/mem256, imm8

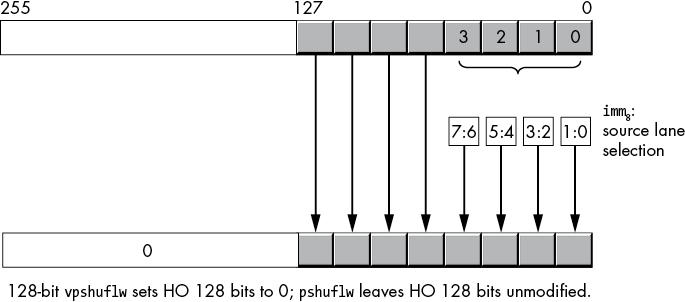

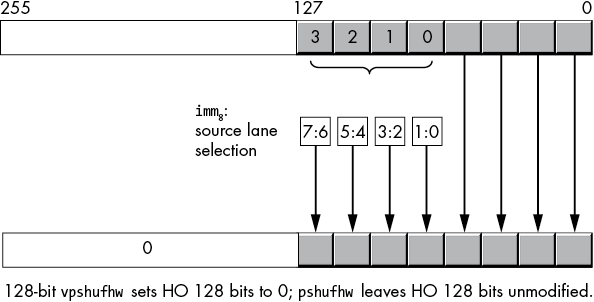

vpshufhw ymmdest, ymmsrc/mem256, imm8The 128-bit lw variants copy the HO 64 bits of the source operand to the same positions in the XMMdest operand. Then they use the index (imm8) operand to select word lanes 0 to 3 in the LO qword of the XMMsrc/mem128 operand to move to the LO 4 lanes of the destination operand. For example, if the LO 2 bits of imm8 are 10b, then the pshuflw instruction copies lane 2 from the source into lane 0 of the destination operand (Figure 11-22). Note that pshuflw does not modify the HO 128 bits of the overlaid YMM register, whereas vpshuflw zeroes those HO bits.

Figure 11-22: (v)pshuflw xmm, xmm/mem, imm8 operation

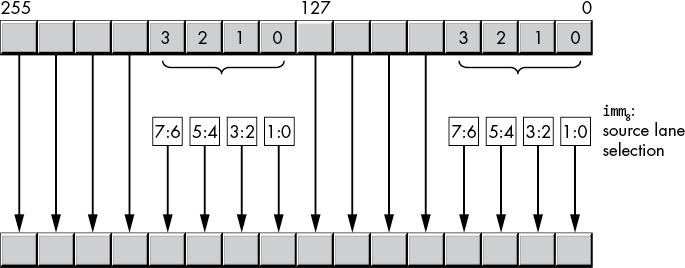

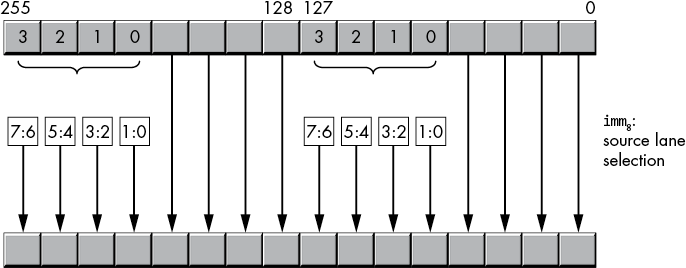

The 256-bit vpshuflw instruction (with a YMM destination register) copies two pairs of words at a time—one pair in the HO 128 bits and one pair in the LO 128 bits of the YMM destination register and 256-bit source locations, as shown in Figure 11-23. The index (imm8) selection is the same for the LO and HO 128 bits.

Figure 11-23: vpshuflw ymm, ymm/mem, imm8 operation

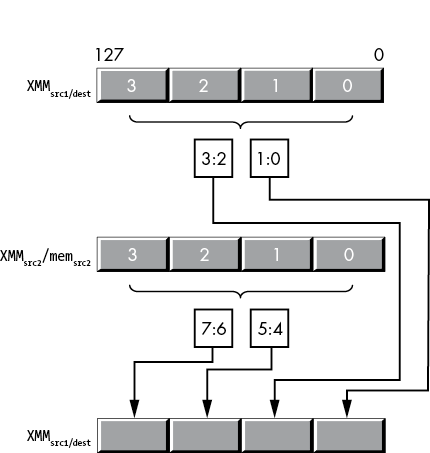

The 128-bit hw variants copy the LO 64 bits of the source operand to the same positions in the destination operand. Then they use the index operand to select words 4 to 7 (indexed as 0 to 3) in the 128-bit source operand to move to the HO four word lanes of the destination operand (Figure 11-24).

Figure 11-24: (v)pshufhw operation

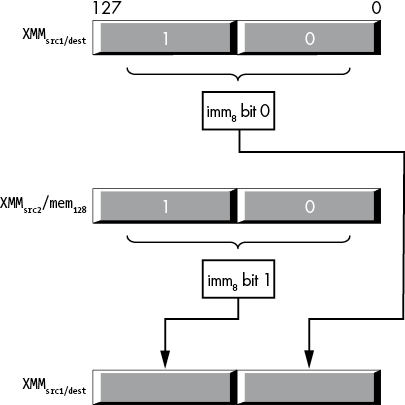

The 256-bit vpshufhw instruction (with a YMM destination register) copies two pairs of words at a time—one in the HO 128 bits and one in the LO 128 bits of the YMM destination register and 256-bit source locations, as shown in Figure 11-25.

Figure 11-25: vpshufhw operation

11.7.4 The shufps and shufpd Instructions

The shuffle instructions (shufps and shufpd) extract single- or double-precision values from the source operands and place them in specified positions in the destination operand. The third operand, an 8-bit immediate value, selects which values to extract from the source to move into the destination register. The syntax for these two instructions is as follows:

shufps xmmsrc1/dest, xmmsrc2/mem128, imm8

shufpd xmmsrc1/dest, xmmsrc2/mem128, imm8For the shufps instruction, the second source operand is an 8-bit immediate value that is actually a four-element array of 2-bit values.

imm8 bits 0 and 1 select a single-precision value from one of the four lanes in the XMMsrc1/dest operand to store into lane 0 of the destination operation. Bits 2 and 3 select a single-precision value from one of the four lanes in the XMMsrc1/dest operand to store into lane 1 of the destination operation (the destination operand is also XMMsrc1/dest).

imm8 bits 4 and 5 select a single-precision value from one of the four lanes in the XMMsrc2/memsrc2 operand to store into lane 2 of the destination operation. Bits 6 and 7 select a single-precision value from one of the four lanes in the XMMsrc2/memsrc2 operand to store into lane 3 of the destination operation.

Figure 11-26 shows the operation of the shufps instruction.

Figure 11-26: shufps operation

For example, the instruction

shufps xmm0, xmm1, 0E4h ; 0E4h = 11 10 01 00loads XMM0 with the following single-precision values:

- XMM0[0 to 31] from XMM0[0 to 32]

- XMM0[32 to 63] from XMM0[32 to 63]

- XMM0[64 to 95] from XMM1[63 to 95]

- XMM0[96 to 127] from XMM1[96 to 127]

If the second operand (XMMsrc2/memsrc2) is the same as the first operand (XMMsrc1/dest), it’s possible to rearrange the four single-precision values in the XMMdest register (which is probably the source of the instruction name shuffle).

The shufpd instruction works similarly, shuffling double-precision values. As there are only two double-precision values in an XMM register, it takes only a single bit to choose between the values. Likewise, as there are only two double-precision values in the destination register, the instruction requires only two (single-bit) array elements to choose the destination. As a result, the third operand, the imm8 value, is actually just a 2-bit value; the instruction ignores bits 2 to 7 in the imm8 operand. Bit 0 of the imm8 operand selects either lane 0 and bits 0 to 63 (if it is 0) or lane 1 and bits 64 to 127 (if it is 1) from the XMMsrc1/dest operand to place into lane 0 and bits 0 to 63 of XMMdest. Bit 1 of the imm8 operand selects either lane 0 and bits 0 to 63 (if it is 0) or lane 1 and bits 64 to 127 (if it is 1) from the XMMsrc/mem128 operand to place into lane 1 and bits 64 to 127 of XMMdest. Figure 11-27 shows this operation.

Figure 11-27: shufpd operation

11.7.5 The vshufps and vshufpd Instructions

The vshufps and vshufpd instructions are similar to shufps and shufpd. They allow you to shuffle the values in 128-bit XMM registers or 256-bit YMM registers.8 The vshufps and vshufpd instructions have four operands: a destination XMM or YMM register, two source operands (src1 must be an XMM or a YMM register, and src2 can be an XMM or a YMM register or a 128- or 256-bit memory location), and an imm8 operand. Their syntax is the following:

vshufps xmmdest, xmmsrc1, xmmsrc2/mem128, imm8

vshufpd xmmdest, xmmsrc1, xmmsrc2/mem128, imm8

vshufps ymmdest, ymmsrc1, ymmsrc2/mem256, imm8

vshufpd ymmdest, ymmsrc1, ymmsrc2/mem256, imm8Whereas the SSE shuffle instructions use the destination register as an implicit source operand, the AVX shuffle instructions allow you to specify explicit destination and source operands (they can all be different, or all the same, or any combination thereof).

For the 256-bit vshufps instructions, the imm8 operand is an array of four 2-bit values (bits 0:1, 2:3, 4:5, and 6:7). These 2-bit values select one of four single-precision values from the source locations, as described in Table 11-5.

Table 11-5: vshufps Destination Selection

| Destination | imm8 value | ||||

| imm8 bits | 00 | 01 | 10 | 11 | |

| 76 54 32 10 | Dest[0 to 31] | Src1[0 to 31] | Src1[32 to 63] | Src1[64 to 95] | Src1[96 to 127] |

| Dest[128 to 159] | Src1[128 to 159] | Src1[160 to 191] | Src1[192 to 223] | Src1[224 to 255] | |

| 76 54 32 10 | Dest[32 to 63] | Src1[0 to 31] | Src1[32 to 63] | Src1[64 to 95] | Src1[96 to 127] |

| Dest[160 to 191] | Src1[128 to 159] | Src1[160 to 191] | Src1[192 to 223] | Src1[224 to 255] | |

| 76 54 32 10 | Dest[64 to 95] | Src2[0 to 31] | Src2[32 to 63] | Src2[64 to 95] | Src2[96 to 127] |

| Dest[192 to 223] | Src2[128 to 159] | Src2[160 to 191] | Src2[192 to 223] | Src2[224 to 255] | |

| 76 54 32 10 | Dest[96 to 127] | Src2[0 to 31] | Src2[32 to 63] | Src2[64 to 95] | Src2[96 to 127] |

| Dest[224 to 255] | Src2[128 to 159] | Src2[160 to 191] | Src2[192 to 223] | Src2[224 to 255] | |

If both source operands are the same, you can shuffle around the single-precision values in any order you choose (and if the destination and both source operands are the same, you can arbitrarily shuffle the dwords within that register).

The vshufps instruction also allows you to specify XMM and 128-bit memory operands. In this form, it behaves quite similarly to the shufps instruction except that you get to specify two different 128-bit source operands (rather than only one 128-bit source operand), and it zeroes the HO 128 bits of the corresponding YMM register. If the destination operand is different from the first source operand, this can be useful. If the vshufps’s first source operand is the same XMM register as the destination operand, you should use the shufps instruction as its machine encoding is shorter.

The vshufpd instruction is an extension of shufpd to 256 bits (plus the addition of a second source operand). As there are four double-precision values present in a 256-bit YMM register, vshufpd needs 4 bits to select the source indexes (rather than the 2 bits that shufpd requires). Table 11-6 describes how vshufpd copies the data from the source operands to the destination operand.

Table 11-6: vshufpd Destination Selection

| Destination | imm8 value | ||

| imm8 bits | 0 | 1 | |

| 7654 3 2 1 0 | Dest[0 to 63] | Src1[0 to 63] | Src1[64 to 127] |

| 7654 3 2 1 0 | Dest[64 to 127] | Src2[0 to 63] | Src2[64 to 127] |

| 7654 3 2 1 0 | Dest[128 to 191] | Src1[128 to 191] | Src1[192 to 255] |

| 7654 3 2 1 0 | Dest[192 to 255] | Src2[128 to 191] | Src2[192 to 255] |

Like the vshufps instruction, vshufpd also allows you to specify XMM registers if you want a three-operand version of shufpd.

11.7.6 The (v)unpcklps, (v)unpckhps, (v)unpcklpd, and (v)unpckhpd Instructions

The unpack (and merge) instructions are a simplified variant of the shuffle instructions. These instructions copy single- and double-precision values from fixed locations in their source operands and insert those values into fixed locations in the destination operand. They are, essentially, shuffle instructions without the imm8 operand and with fixed shuffle patterns.

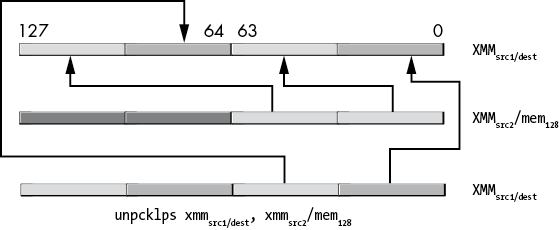

The unpcklps and unpckhps instructions choose half their single-precision operands from one of two sources, merge these values (interleaving them), and then store the merged result into the destination operand (which is the same as the first source operand). The syntax for these two instructions is as follows:

unpcklps xmmdest, xmmsrc/mem128

unpckhps xmmdest, xmmsrc/mem128The XMMdest operand serves as both the first source operand and the destination operand. The XMMsrc/mem128 operand is the second source operand.

The difference between the two is the way they select their source operands. The unpcklps instruction copies the two LO single-precision values from the source operand to bit positions 32 to 63 (dword 1) and 96 to 127 (dword 3). It leaves dword 0 in the destination operand alone and copies the value originally in dword 1 to dword 2 in the destination. Figure 11-28 diagrams this operation.

Figure 11-28: unpcklps instruction operation

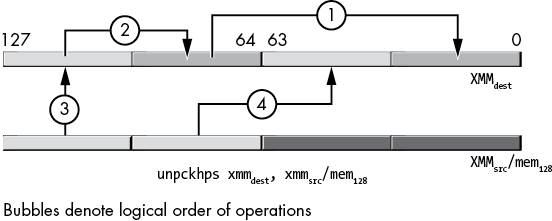

The unpckhps instruction copies the two HO single-precision values from the two sources to the destination register, as shown in Figure 11-29.

Figure 11-29: unpckhps instruction operation

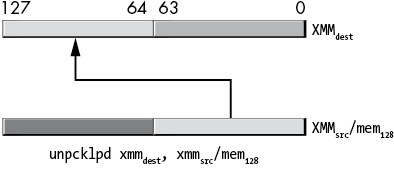

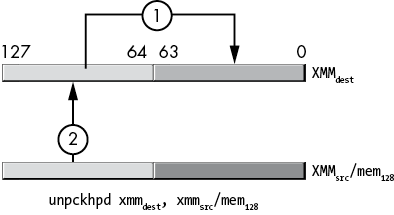

The unpcklpd and unpckhpd instructions do the same thing as unpcklps and unpckhps except, of course, they operate on double-precision values rather than single-precision values. Figures 11-30 and 11-31 show the operation of these two instructions.

Figure 11-30: unpcklpd instruction operation

Figure 11-31: unpckhpd instruction operation

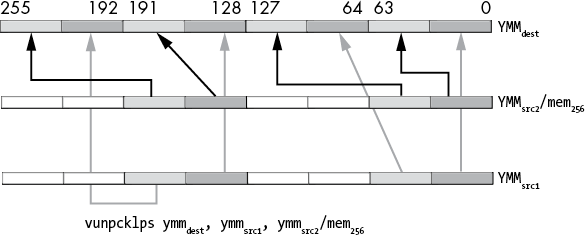

The vunpcklps, vunpckhps, vunpcklpd, and vunpckhpd instructions have the following syntax:

vunpcklps xmmdest, xmmsrc1, xmmsrc2/mem128

vunpckhps xmmdest, xmmsrc1, xmmsrc2/mem128

vunpcklps ymmdest, ymmsrc1, ymmsrc2/mem256

vunpckhps ymmdest, ymmsrc1, ymmsrc2/mem256They work similarly to the non-v variants, with a couple of differences:

- The AVX variants support using the YMM registers as well as the XMM registers.

- The AVX variants require three operands. The first (destination) and second (source1) operands must be XMM or YMM registers. The third (source2) operand can be an XMM or a YMM register or a 128- or 256-bit memory location. The two-operand form is just a special case of the three-operand form, where the first and second operands specify the same register name.

- The 128-bit variants zero out the HO bits of the YMM register rather than leaving those bits unchanged.

Of course, the AVX instructions with the YMM registers interleave twice as many single- or double-precision values. The interleaving extension happens in the intuitive way, with vunpcklps (Figure 11-32):

- The single-precision values in source1, bits 0 to 31, are first written to bits 0 to 31 of the destination.

- The single-precision values in source2, bits 0 to 31, are written to bits 32 to 63 of the destination.

- The single-precision values in source1, bits 32 to 63, are written to bits 64 to 95 of the destination.

- The single-precision values in source2, bits 32 to 63, are written to bits 96 to 127 of the destination.

- The single-precision values in source1, bits 128 to 159, are first written to bits 128 to 159 of the destination.

- The single-precision values in source2, bits 128 to 159, are written to bits 160 to 191 of the destination.

- The single-precision values in source1, bits 160 to 191, are written to bits 192 to 223 of the destination.

- The single-precision values in source2, bits 160 to 191, are written to bits 224 to 256 of the destination.

Figure 11-32: vunpcklps instruction operation

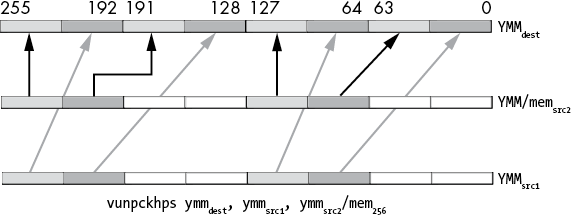

The vunpckhps instruction (Figure 11-33) does the following:

- The single-precision values in source1, bits 64 to 95, are first written to bits 0 to 31 of the destination.

- The single-precision values in source2, bits 64 to 95, are written to bits 32 to 63 of the destination.

- The single-precision values in source1, bits 96 to 127, are written to bits 64 to 95 of the destination.

- The single-precision values in source2, bits 96 to 127, are written to bits 96 to 127 of the destination.

Figure 11-33: vunpckhps instruction operation

Likewise, vunpcklpd and vunpckhpd move double-precision values.

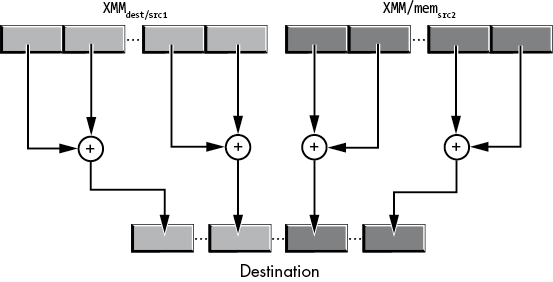

11.7.7 The Integer Unpack Instructions

The punpck* instructions provide a set of integer unpack instructions to complement the floating-point variants. These instructions appear in Table 11-7.

Table 11-7: Integer Unpack Instructions

| Instruction | Description |

punpcklbw |

Unpacks low bytes to words |

punpckhbw |

Unpacks high bytes to words |

punpcklwd |

Unpacks low words to dwords |

punpckhwd |

Unpacks high words to dwords |

punpckldq |

Unpacks low dwords to qwords |

punpckhdq |

Unpacks high dwords to qwords |

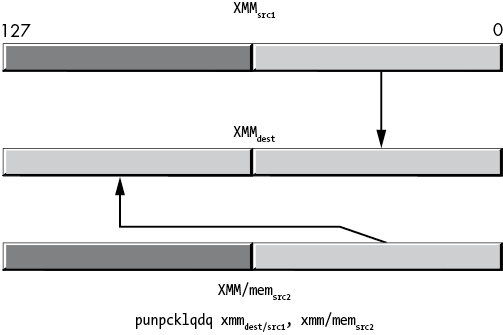

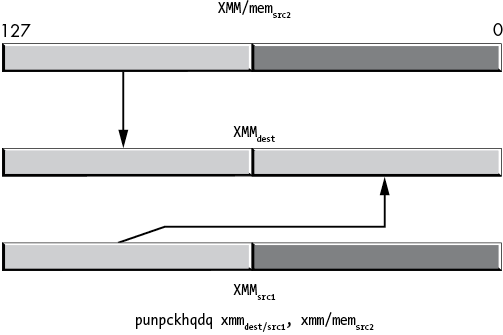

punpcklqdq |

Unpacks low qwords to owords (double qwords) |

punpckhqdq |

Unpacks high qwords to owords (double qwords) |

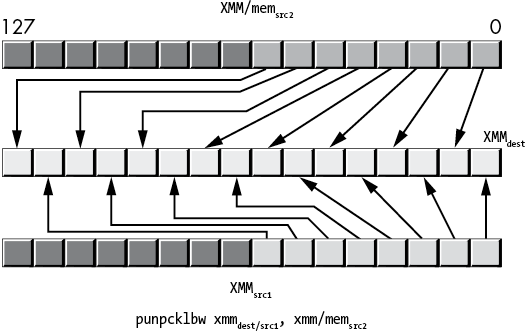

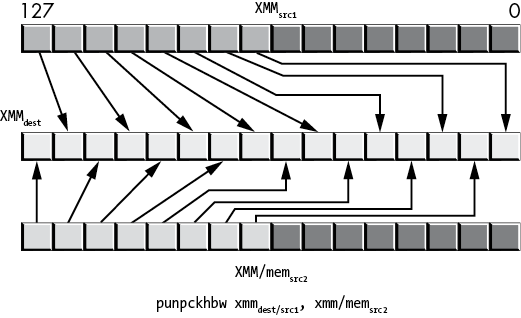

11.7.7.1 The punpck* Instructions

The punpck* instructions extract half the bytes, words, dwords, or qwords from two different sources and merge these values into a destination SSE register. The syntax for these instructions is shown here:

punpcklbw xmmdest, xmmsrc

punpcklbw xmmdest, memsrc

punpckhbw xmmdest, xmmsrc

punpckhbw xmmdest, memsrc

punpcklwd xmmdest, xmmsrc

punpcklwd xmmdest, memsrc

punpckhwd xmmdest, xmmsrc

punpckhwd xmmdest, memsrc

punpckldq xmmdest, xmmsrc

punpckldq xmmdest, memsrc

punpckhdq xmmdest, xmmsrc

punpckhdq xmmdest, memsrc

punpcklqdq xmmdest, xmmsrc

punpcklqdq xmmdest, memsrc

punpckhqdq xmmdest, xmmsrc

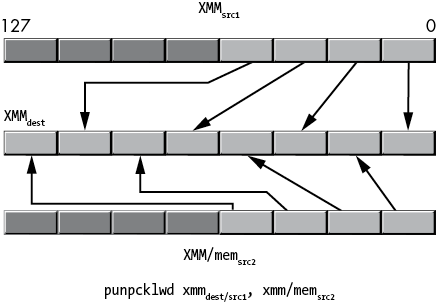

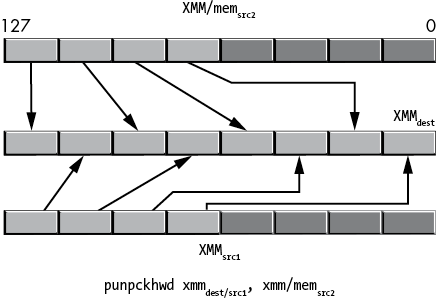

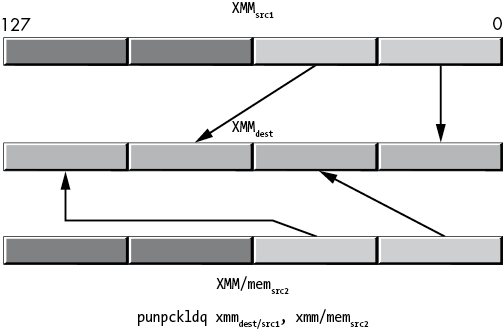

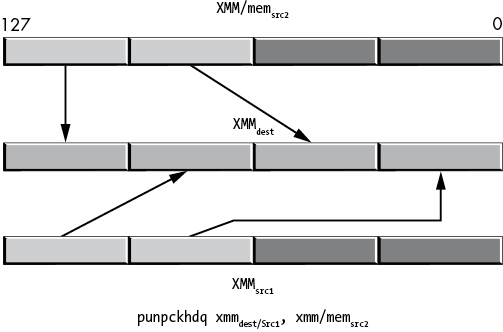

punpckhqdq xmmdest, memsrcFigures 11- 34 through 11-41 show the data transfers for each of these instructions.

Figure 11-34: punpcklbw instruction operation

Figure 11-35: punpckhbw operation

Figure 11-36: punpcklwd operation

Figure 11-37: punpckhwd operation

Figure 11-38: punpckldq operation

Figure 11-39: punpckhdq operation

Figure 11-40: punpcklqdq operation

Figure 11-41: punpckhqdq operation

11.7.7.2 The vpunpck* SSE Instructions

The AVX vpunpck* instructions provide a set of AVX integer unpack instructions to complement the SSE variants. These instructions appear in Table 11-8.

Table 11-8: AVX Integer Unpack Instructions

| Instruction | Description |

vpunpcklbw |

Unpacks low bytes to words |

vpunpckhbw |

Unpacks high bytes to words |

vpunpcklwd |

Unpacks low words to dwords |

vpunpckhwd |

Unpacks high words to dwords |

vpunpckldq |

Unpacks low dwords to qwords |

vpunpckhdq |

Unpacks high dwords to qwords |

vpunpcklqdq |

Unpacks low qwords to owords (double qwords) |

vpunpckhqdq |

Unpacks high qwords to owords (double qwords) |

The vpunpck* instructions extract half the bytes, words, dwords, or qwords from two different sources and merge these values into a destination AVX or SSE register. Here is the syntax for the SSE forms of these instructions:

vpunpcklbw xmmdest, xmmsrc1, xmmsrc2/mem128

vpunpckhbw xmmdest, xmmsrc1, xmmsrc2/mem128

vpunpcklwd xmmdest, xmmsrc1, xmmsrc2/mem128

vpunpckhwd xmmdest, xmmsrc1, xmmsrc2/mem128

vpunpckldq xmmdest, xmmsrc1, xmmsrc2/mem128

vpunpckhdq xmmdest, xmmsrc1, xmmsrc2/mem128

vpunpcklqdq xmmdest, xmmsrc1, xmmsrc2/mem128

vpunpckhqdq xmmdest, xmmsrc1, xmmsrc2/mem128Functionally, the only difference between these AVX instructions (vunpck*) and the SSE (unpck*) instructions is that the SSE variants leave the upper bits of the YMM AVX registers (bits 128 to 255) unchanged, whereas the AVX variants zero-extend the result to 256 bits. See Figures 11-34 through 11-41 for a description of the operation of these instructions.

11.7.7.3 The vpunpck* AVX Instructions

The AVX vunpck* instructions also support the use of the AVX YMM registers, in which case the unpack and merge operation extends from 128 bits to 256 bits. The syntax for these instructions is as follows:

vpunpcklbw ymmdest, ymmsrc1, ymmsrc2/mem256

vpunpckhbw ymmdest, ymmsrc1, ymmsrc2/mem256

vpunpcklwd ymmdest, ymmsrc1, ymmsrc2/mem256

vpunpckhwd ymmdest, ymmsrc1, ymmsrc2/mem256

vpunpckldq ymmdest, ymmsrc1, ymmsrc2/mem256

vpunpckhdq ymmdest, ymmsrc1, ymmsrc2/mem256

vpunpcklqdq ymmdest, ymmsrc1, ymmsrc2/mem256

vpunpckhqdq ymmdest, ymmsrc1, ymmsrc2/mem25611.7.8 The (v)pextrb, (v)pextrw, (v)pextrd, and (v)pextrq Instructions

The (v)pextrb, (v)pextrw, (v)pextrd, and (v)pextrq instructions extract a byte, word, dword, or qword from a 128-bit XMM register and copy this data to a general-purpose register or memory location. The syntax for these instructions is the following:

pextrb reg32, xmmsrc, imm8 ; imm8 = 0 to 15

pextrb reg64, xmmsrc, imm8 ; imm8 = 0 to 15

pextrb mem8, xmmsrc, imm8 ; imm8 = 0 to 15

vpextrb reg32, xmmsrc, imm8 ; imm8 = 0 to 15

vpextrb reg64, xmmsrc, imm8 ; imm8 = 0 to 15

vpextrb mem8, xmmsrc, imm8 ; imm8 = 0 to 15

pextrw reg32, xmmsrc, imm8 ; imm8 = 0 to 7

pextrw reg64, xmmsrc, imm8 ; imm8 = 0 to 7

pextrw mem16, xmmsrc, imm8 ; imm8 = 0 to 7

vpextrw reg32, xmmsrc, imm8 ; imm8 = 0 to 7

vpextrw reg64, xmmsrc, imm8 ; imm8 = 0 to 7

vpextrw mem16, xmmsrc, imm8 ; imm8 = 0 to 7

pextrd reg32, xmmsrc, imm8 ; imm8 = 0 to 3

pextrd mem32, xmmsrc, imm8 ; imm8 = 0 to 3

vpextrd mem64, xmmsrc, imm8 ; imm8 = 0 to 3

vpextrd reg32, xmmsrc, imm8 ; imm8 = 0 to 3

vpextrd reg64, xmmsrc, imm8 ; imm8 = 0 to 3

vpextrd mem32, xmmsrc, imm8 ; imm8 = 0 to 3

pextrq reg64, xmmsrc, imm8 ; imm8 = 0 to 1

pextrq mem64, xmmsrc, imm8 ; imm8 = 0 to 1

vpextrq reg64, xmmsrc, imm8 ; imm8 = 0 to 1